1.5.6 Graph Partition

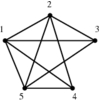

INPUT OUTPUT

Input Description:

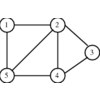

A (weighted) graph G=(V,E).

Integers j, k, and m.

Problem:

Partition the vertices into m subsets such that each subset

has size at most j, while the cost of the edges spanning subsets

is bounded by k.

Excerpt from

The Algorithm Design Manual:

Graph partitioning arises as a preprocessing step to divide-and-conquer algorithms, where it is often a good idea

to break things into roughly equal-sized pieces. It also arises when dealing with extremely large graphs, when we

need to cluster the vertices into logical components for storage (to improve virtual memory performance) or for

drawing purposes (to collapse dense subgraphs into single nodes in order to reduce cluttering).

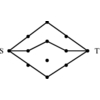

The basic approach to dealing with graph partitioning or max-cut problems is to construct an initial partition of

the vertices (either randomly or according to some problem-specific strategy) and then sweep through the vertices,

deciding whether the size of the cut would increase or decrease if we moved this vertex over to the other side.

The decision to move v can be made in time proportional to its degree by simply counting whether more of

v's neighbors are on the same team as v or not. Of course, the desirable side for $v$ will change if

many of its neighbors jump, so multiple passes are likely to be needed before the process converges on a local optimum.

Even such a local optimum can be arbitrarily far away from the global max-cut.

Recommended Books

Related Problems

This page last modified on 2008-07-10

.

www.algorist.com

Spectral Graph Theory

Spectral Graph Theory