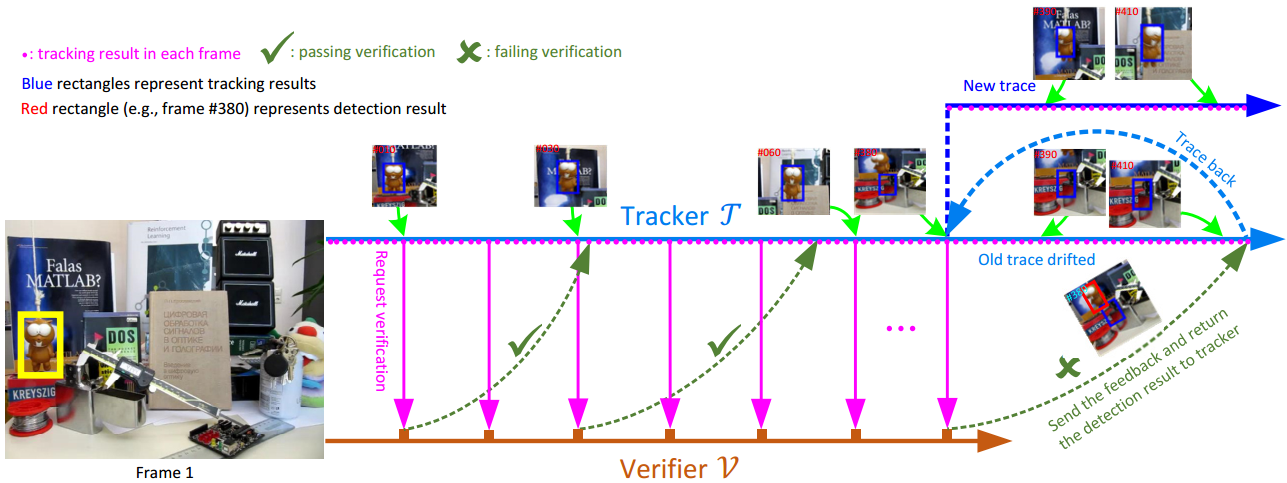

Figure 1. Illustration of the PTAV framework in which tracking and verifying are processed in two parallel asynchronous threads.

Being intensively studied, visual tracking has seen great recent advances in either speed (e.g., with correlation filters) or accuracy (e.g., with deep features). Tracking algorithms that are both real-time and highly accurate, however, remain scarce. In this paper we study the problem from a new perspective and present a novel parallel tracking and verifying (PTAV) framework, taking advantage of the ubiquity of multi-threading techniques and borrowing from the success of parallel tracking and mapping in visual SLAM. The proposed PTAV framework typically consists of two components, a tracker T and a verifier V, working in parallel on two separate threads. The tracker T aims to provide super real-time tracking inference and is expected to perform well most of the time; by contrast, the verifier V checks the tracking results and corrects T when needed. The key innovation is that V does not work on every frame but only upon requests from T; in turn, T may adjust the tracking according to the feedback from V. With such collaboration, PTAV enjoys both the high efficiency provided by T and the strong discriminative power of V. In our extensive experiments on popular benchmarks including OTB2013, OTB2015, TC128 and UAV20L, PTAV achieves the best tracking accuracy among all real-time trackers, and in fact performs even better than many deep learning based solutions. Moreover, as a general framework, PTAV is very flexible and leaves ample room for improvement and generalization. Figure 1 illustrates the proposed PTAV framework.
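The interaction between T and V described above can be sketched with two threads and two queues. This is a toy illustration only, not the released C++ or Matlab code: the 1-D "frames", the drifting tracker, the fixed `verify_interval`, the `tolerance` check, and the function name `run_ptav_sketch` are all hypothetical stand-ins chosen to keep the example self-contained.

```python
import queue
import threading


def run_ptav_sketch(frames, verify_interval=10, tolerance=2.0, drift=0.5):
    """Toy tracker/verifier loop on 1-D target positions (all names hypothetical)."""
    requests = queue.Queue()   # tracker T -> verifier V
    feedback = queue.Queue()   # verifier V -> tracker T
    stats = {"requests": 0, "verified": 0, "corrections": 0}

    def verifier():
        # V only wakes up when T sends a request; None is the shutdown sentinel.
        while True:
            item = requests.get()
            if item is None:
                break
            frame_idx, estimate = item
            # Stand-in for an expensive, more discriminative check.
            ok = abs(estimate - frames[frame_idx]) <= tolerance
            feedback.put((frame_idx, ok))
            stats["verified"] += 1

    v_thread = threading.Thread(target=verifier)
    v_thread.start()

    results = []
    bias = 0.0  # accumulated error of the cheap tracker
    for idx, position in enumerate(frames):
        # T never blocks on V: it only consumes feedback that has already arrived.
        while True:
            try:
                _, ok = feedback.get_nowait()
            except queue.Empty:
                break
            if not ok:
                bias = 0.0  # assumed reaction: re-initialise after a failed check
                stats["corrections"] += 1

        bias += drift                     # the fast tracker slowly drifts
        estimate = position + bias
        results.append(estimate)

        if idx % verify_interval == 0:    # T requests verification only occasionally
            requests.put((idx, estimate))
            stats["requests"] += 1

    requests.put(None)
    v_thread.join()
    return results, stats
```

Because the two threads run asynchronously, the frame at which a correction takes effect can vary between runs; only the number of requests and verifications is fixed by `verify_interval`.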

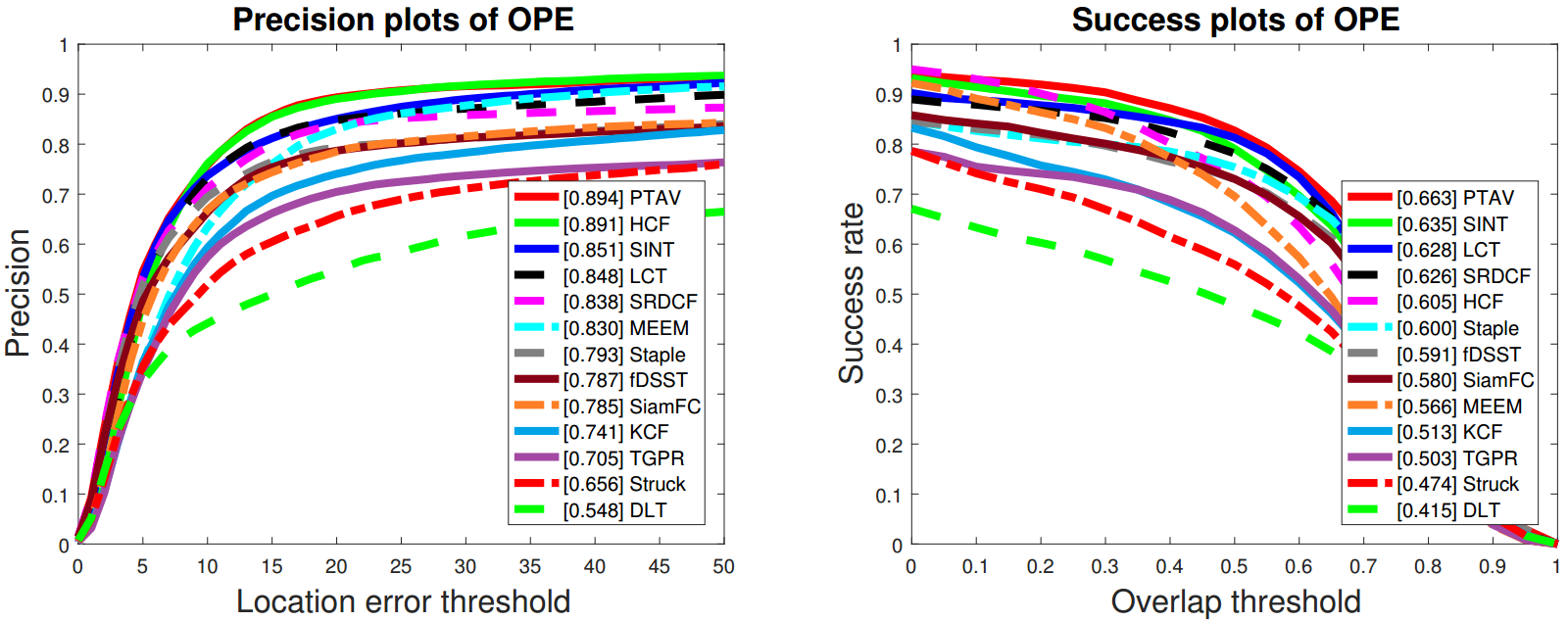

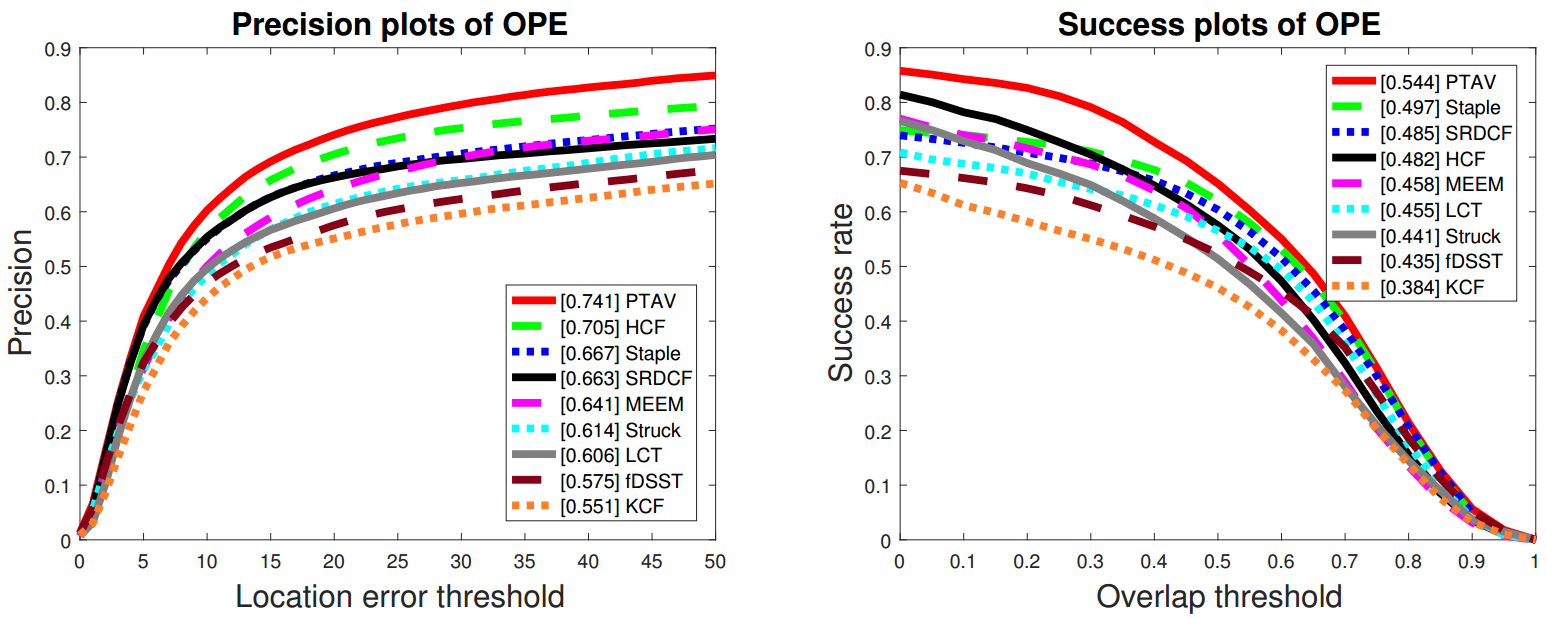

Comparison on OTB2013

Comparison on OTB2015

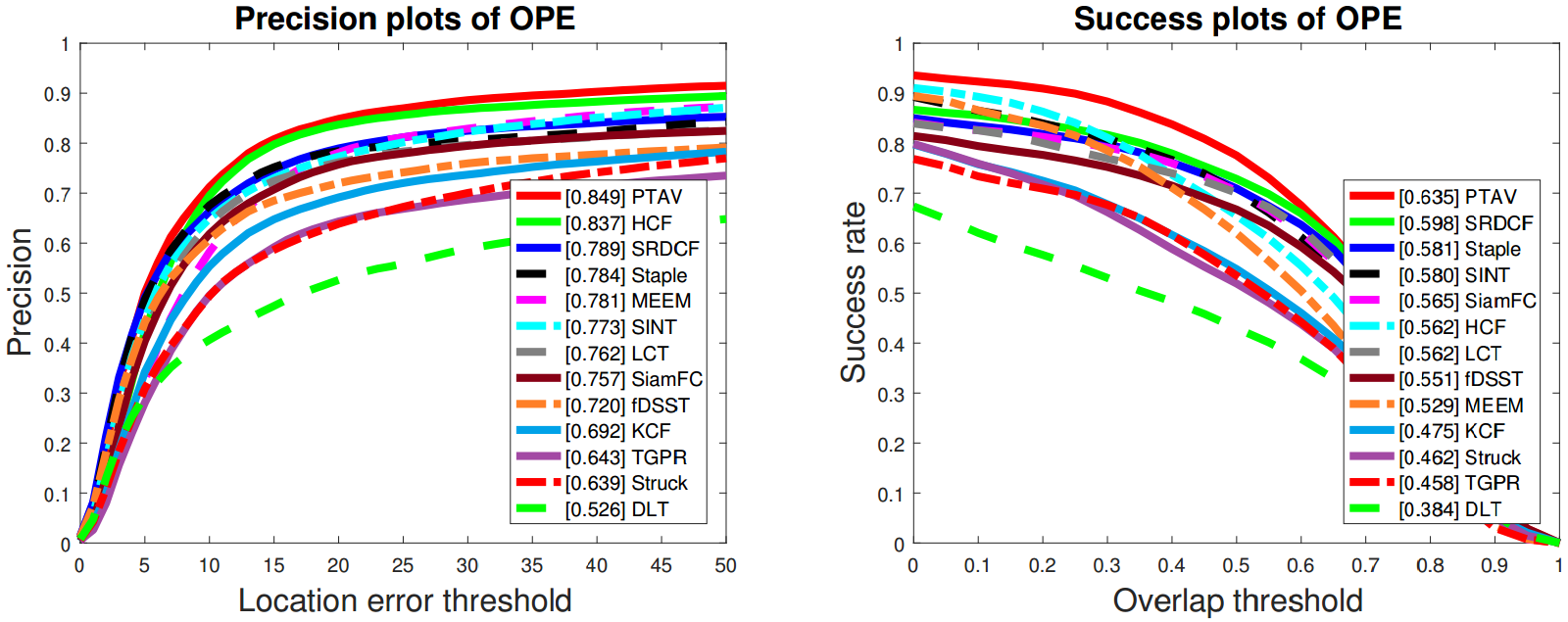

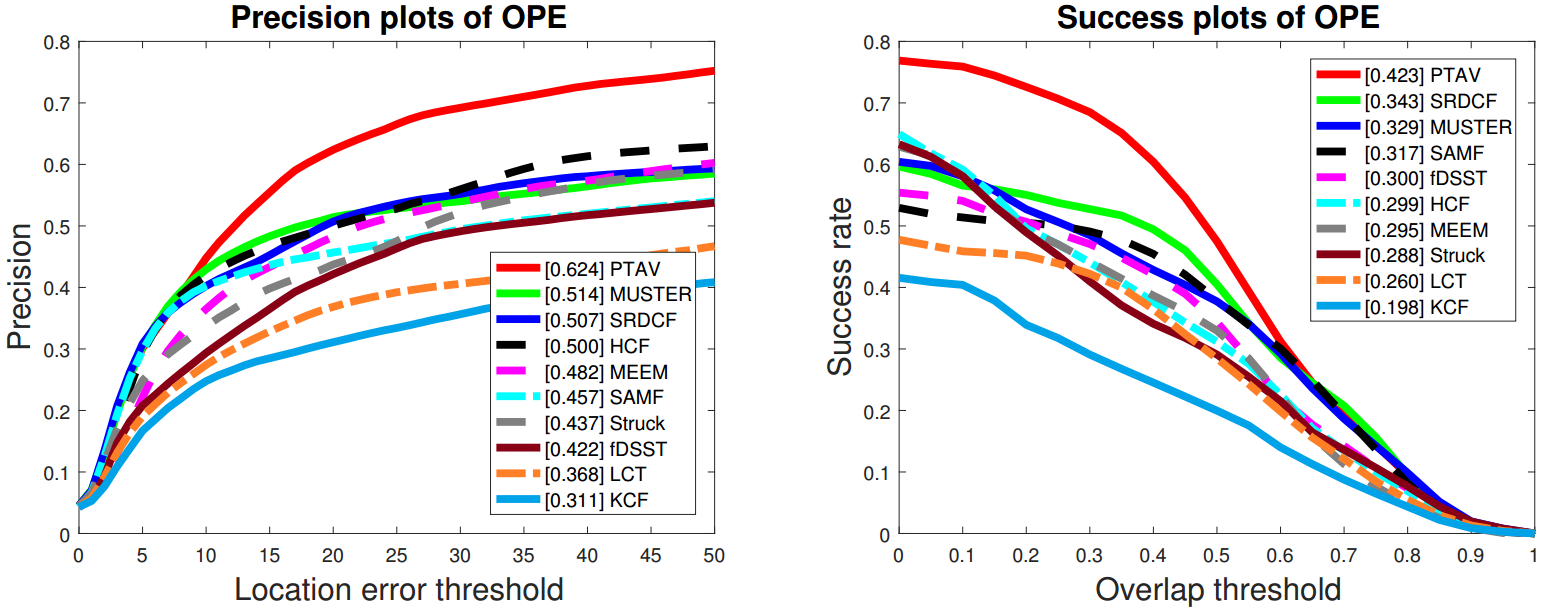

Comparison on TC128

Comparison on UAV20L

Figure 2. The precision plots and success plots of OPE for the proposed tracker and other state-of-the-art methods on OTB2013, OTB2015, TC128 and UAV20L. The performance score for each tracker is shown in the legend. The performance score of the precision plot is taken at an error threshold of 20 pixels, while the performance score of the success plot is the AUC value. Best viewed on a color display.
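The two scores named in the caption can be computed roughly as follows. This is a minimal sketch, assuming boxes in `(x, y, w, h)` format, precision defined as the fraction of frames whose center location error is within the threshold, and success AUC approximated as the mean success rate over 21 evenly spaced overlap thresholds in [0, 1]. The function names and the threshold sampling are assumptions for illustration, not taken from the benchmark toolkits.

```python
import math


def center_error(a, b):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    ax, ay = a[0] + a[2] / 2.0, a[1] + a[3] / 2.0
    bx, by = b[0] + b[2] / 2.0, b[1] + b[3] / 2.0
    return math.hypot(ax - bx, ay - by)


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2 = min(a[0] + a[2], b[0] + b[2])
    y2 = min(a[1] + a[3], b[1] + b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_score(pred, gt, threshold=20.0):
    """Fraction of frames with center error <= threshold (in pixels)."""
    errors = [center_error(p, g) for p, g in zip(pred, gt)]
    return sum(e <= threshold for e in errors) / len(errors)


def success_auc(pred, gt, num_thresholds=21):
    """Mean success rate over evenly spaced overlap thresholds in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)
```

For example, with two frames where one prediction matches the ground truth exactly and the other is 30 pixels off with no overlap, `precision_score` gives 0.5 at the 20-pixel threshold.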

Heng Fan and Haibin Ling. Parallel Tracking and Verifying: A Framework for Real-Time and High Accuracy Visual Tracking. IEEE International Conference on Computer Vision (ICCV), 2017. [Paper][Supplementary Material][C++ Parallel Code][Matlab Serial Code (including siamese caffe model, ~800M)][Tracking Results]

[1] H. Fan and H. Ling. Parallel Tracking and Verifying: A Framework for Real-Time and High Accuracy Visual Tracking. In ICCV, 2017.

[2] R. Tao, E. Gavves, and A. W. M. Smeulders. Siamese Instance Search for Tracking. In CVPR, pp. 1420-1429, 2016.

[3] M. Danelljan, G. Hager, F. S. Khan, and M. Felsberg. Discriminative Scale Space Tracking. IEEE TPAMI, vol. 38, no. 9, pp. 1561-1575, 2017.

[4] G. Klein and D. Murray. Parallel Tracking and Mapping for Small AR Workspaces. In ISMAR, pp. 225-234, 2007.

This software is free for use in research projects. If you publish results obtained using this software, please cite our paper. NOTE: by clicking any of the download links above, you agree to these terms.

If you have any questions, please contact Heng Fan (hengfan AT temple.edu).