Integrating Humans and Computers for Image and Video Understanding

Visual imagery is powerful; it is a transformative force on a global scale. It has fueled the social reforms that are sweeping across the Middle East and Africa, and is sparking public debate in this country over questions of personal privacy. It is also creating unparalleled opportunities for people across the planet to exchange information, communicate ideas, and to collaborate in ways that would not have been possible even a few short years ago. Part of the power of visual imagery comes from the staggering number of images and videos available to the public via social media and community photo websites. With this explosive growth, however, comes the problem of searching for relevant content. Most search engines largely ignore the actual visual content of images, relying almost exclusively on associated text, which is often insufficient. In addition to the growing ubiquitousness of web imagery, we also notice another kind of visual phenomena, the proliferation of cameras viewing the user, from the ever present webcams peering out at us from our laptops, to cell phone cameras carried in our pockets wherever we go. This record of a user’s viewing behavior, particularly of their eye, head, body movements, or description, could provide enormous insight into how people interact with images or video, and inform applications like image retrieval. In this project our research goals are: 1) behavioral experiments to better understand the relationship between human viewers and imagery and 2) development of a human-computer collaborative system for image and video understanding, including subject-verb-object annotations, 3) implementation of retrieval, collection organization, and real world applications using our collaborative models.

People are usually the end consumers of visual imagery. Understanding what people recognize, attend to, or describe about an image is therefore a necessary goal for general image understanding. Toward this goal, we first address how people view and narrate images through behavioral experiments aimed at discovering the relationships between gaze, description, and imagery. Second, our methods integrate human input cues – gaze or description – with object and action recognition algorithms from computer vision to better align models of image understanding with how people interpret imagery. Our underlying belief is that humans and computers provide complimentary sources of information regarding the content of images and video. Computer vision algorithms can provide automatic indications of content through detection and recognition algorithms. These methods can inform estimates of “what” might be “where” in visual imagery, but will always be noisy. In contrast, humans can provide: passive indications of content through gaze patterns - where people look in images or video, or active indications of content through annotations. Finally, we expect that our proposed methods for human-computer collaborative applications will enable improved systems for search, organization, and interaction. (NSF).

- Publications:

Action Classification in Still Images Using Human Eye Movements, Gary L. Ge, Kiwon Yun, Dimitris Samaras, and Gregory J. Zelinsky, The 2nd Vision Meets Cognition Workshop at Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston/USA

Action Classification in Still Images Using Human Eye Movements, Gary L. Ge, Kiwon Yun, Dimitris Samaras, and Gregory J. Zelinsky, The 2nd Vision Meets Cognition Workshop at Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston/USA

[ BibTex ]

How We Look Tells Us What We Do: Action Recognition Using Human Gaze, Kiwon Yun, Gary L. Ge, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2015, Florida/USA

How We Look Tells Us What We Do: Action Recognition Using Human Gaze, Kiwon Yun, Gary L. Ge, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2015, Florida/USA

[ BibTex ]

Studying Relationships Between Human Gaze, Description, and Computer Vision, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky, and Tamara L. Berg, Computer Vision and Pattern Recognition (CVPR) 2013 (Oregon/USA)

Studying Relationships Between Human Gaze, Description, and Computer Vision, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky, and Tamara L. Berg, Computer Vision and Pattern Recognition (CVPR) 2013 (Oregon/USA)

Exploring the Role of Gaze Behavior and Object Detection in Scene Understanding, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky and Tamara L. Berg, Frontiers in Psychology, December 2013, 4(917): 1-14

Exploring the Role of Gaze Behavior and Object Detection in Scene Understanding, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky and Tamara L. Berg, Frontiers in Psychology, December 2013, 4(917): 1-14

Specifying the Relationships Between Objects, Gaze, and Descriptions for Scene Understanding, Kiwon Yun, Yifan Peng, Hossein Adeli, Tamara L. Berg, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2013 (Florida/USA)

Specifying the Relationships Between Objects, Gaze, and Descriptions for Scene Understanding, Kiwon Yun, Yifan Peng, Hossein Adeli, Tamara L. Berg, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2013 (Florida/USA)

- Students:

- Kiwon Yun, Zijun Wei

- Former students:

- Yifan Peng

- Funding: NSF

- Dataset download: [Click here]

- More detail can be found here.

Gaze Behavior and Object Detection in Scene Understanding

[publications]

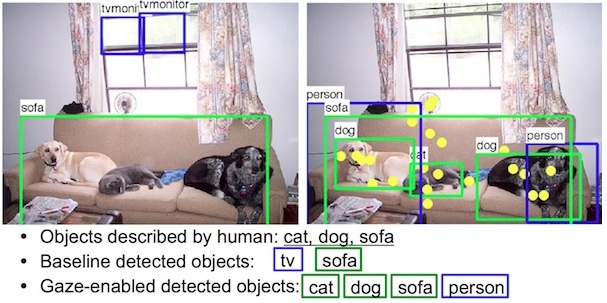

We posit that user behavior during natural viewing of images contains an abundance of information about the content of images as well as information related to user intent and user defined content importance. In this paper, we conduct experiments to better understand the relationship between images, the eye movements people make while viewing images, and how people construct natural language to describe images. We explore these relationships in the context of two commonly used computer vision datasets. We then further relate human cues with outputs of current visual recognition systems and demonstrate prototype applications for gaze-enabled detection and annotation.

Publications

Studying Relationships Between Human Gaze, Description, and Computer Vision, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky, and Tamara L. Berg, Computer Vision and Pattern Recognition (CVPR) 2013 (Oregon/USA)

[ BibTex ]

Studying Relationships Between Human Gaze, Description, and Computer Vision, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky, and Tamara L. Berg, Computer Vision and Pattern Recognition (CVPR) 2013 (Oregon/USA)

[ BibTex ] Exploring the Role of Gaze Behavior and Object Detection in Scene Understanding, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky and Tamara L. Berg, Frontiers in Psychology, December 2013, 4(917): 1-14

[ BibTex ]

Exploring the Role of Gaze Behavior and Object Detection in Scene Understanding, Kiwon Yun, Yifan Peng, Dimitris Samaras, Gregory J. Zelinsky and Tamara L. Berg, Frontiers in Psychology, December 2013, 4(917): 1-14

[ BibTex ] Specifying the Relationships Between Objects, Gaze, and Descriptions for Scene Understanding, Kiwon Yun, Yifan Peng, Hossein Adeli, Tamara L. Berg, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2013 (Florida/USA)

Specifying the Relationships Between Objects, Gaze, and Descriptions for Scene Understanding, Kiwon Yun, Yifan Peng, Hossein Adeli, Tamara L. Berg, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2013 (Florida/USA)

More

- [ Dataset Download ]

- [ More Detail ]

How We Look Tells Us What We Do: Action Recognition Using Human Gaze

[publications]

Despite recent advances in computer vision, image categorization aimed at recognizing the semantic category of an image such as scene, objects or actions remains one of the most challenging tasks in the field. However, human gaze behavior can be harnessed to recognize different classes of actions for automated image understanding. To quantify the spatio-temporal information in gaze we use segments in each image (person, upper-body, lower-body, context) and derive gaze features, which include: number of transitions between segment pairs, avg/max of fixation-density map per segment, dwell time per segment, and a measure of when fixations were made on the person versus the context. We evaluate our gaze features on a subset of images from the challenging PASCAL VOC 2012 Action Classes dataset, while visual features using a Convolutional Neural Network are obtained as a baseline. Two support vector machine classifiers are trained, one with the gaze features and the other with the visual features. Although the baseline classifier outperforms the gaze classifier for classification of 10 actions, analysis of classification results over reveals four behaviorally meaningful action groups where classes within each group are often confused by the gaze classifier. When classifiers are retrained to discriminate between these groups, the performance of the gaze classifier improves significantly relative to the baseline. Furthermore, combining gaze and the baseline outperforms both gaze alone and the baseline alone, suggesting both are contributing to the classification decision and illustrating how gaze can improve state of the art methods of automated action classification.

Publications

Action Classification in Still Images Using Human Eye Movements, Gary L. Ge, Kiwon Yun, Dimitris Samaras, and Gregory J. Zelinsky, The 2nd Vision Meets Cognition Workshop at Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston/USA

Action Classification in Still Images Using Human Eye Movements, Gary L. Ge, Kiwon Yun, Dimitris Samaras, and Gregory J. Zelinsky, The 2nd Vision Meets Cognition Workshop at Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston/USA

[ BibTex ]

How We Look Tells Us What We Do: Action Recognition Using Human Gaze, Kiwon Yun, Gary L. Ge, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2015, Florida/USA

How We Look Tells Us What We Do: Action Recognition Using Human Gaze, Kiwon Yun, Gary L. Ge, Dimitris Samaras, and Gregory J. Zelinsky, Visual Science Society (VSS) 2015, Florida/USA

[ BibTex ]

More

- [ More Detail ]