EyeOpener: Editing Eyes in the Wild


Zhixin Shu¹, Eli Shechtman², Dimitris Samaras¹, Sunil Hadap²

¹ Stony Brook University

² Adobe Research

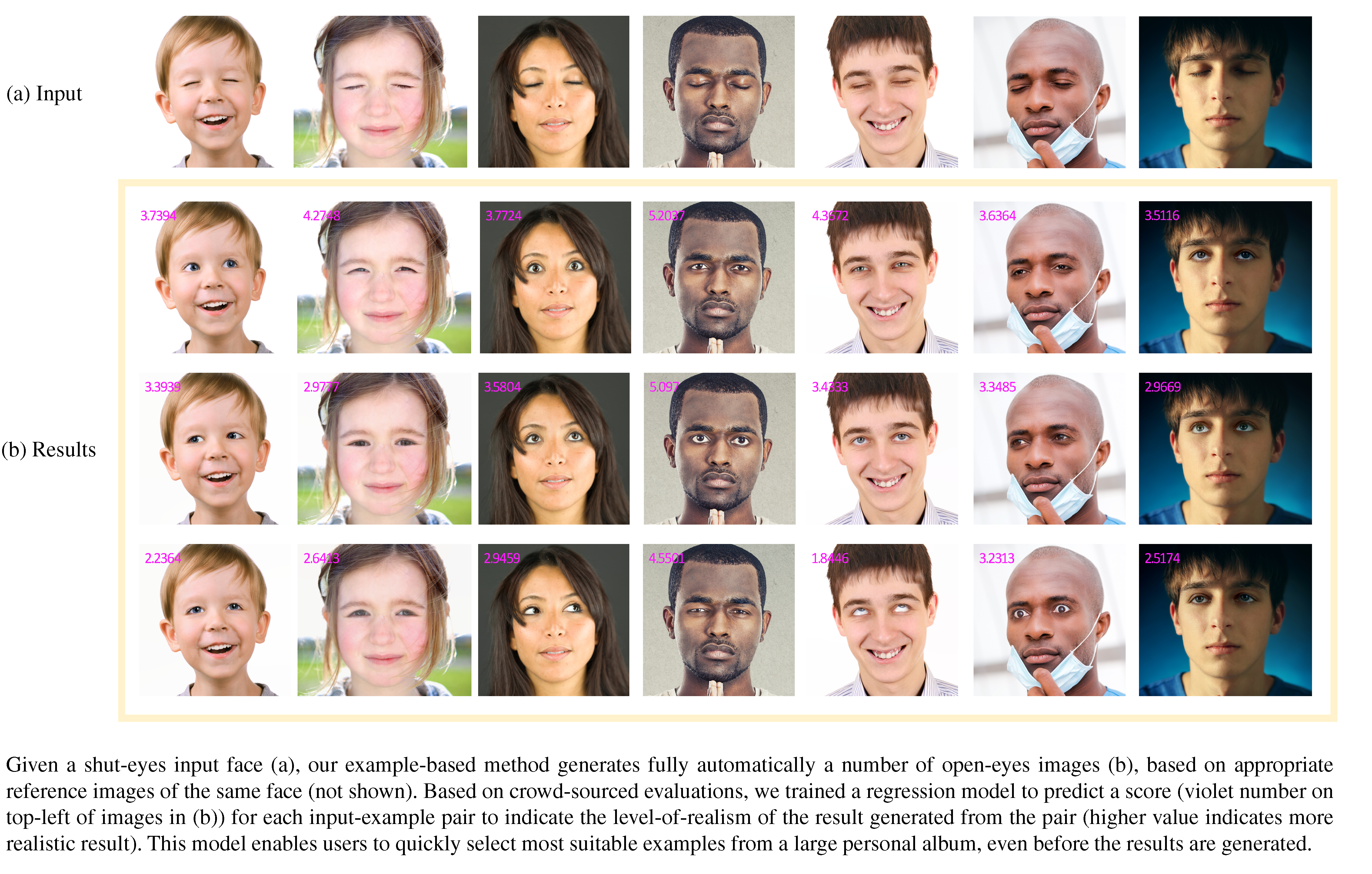

Abstract

Closed eyes and look-aways can ruin precious moments captured in photographs. In this paper, we present a new framework for automatically editing eyes in photographs. We leverage a user’s personal photo collection to find a “good” set of reference eyes, and transfer them onto a target image. Our example-based editing approach is robust and effective for realistic image editing. A fully automatic pipeline for realistic eye-editing is challenging due to the unconstrained conditions under which the face appears in a typical photo collection. We use crowd-sourced human evaluations to understand which aspects of the target-reference image pair produce the most realistic results. We subsequently train a model that automatically selects the top-ranked reference candidate(s) by narrowing the gap in terms of pose, local contrast, lighting conditions and even expressions. Finally, we develop a comprehensive pipeline of 3D face estimation, image warping, relighting, image harmonization, automatic segmentation and image compositing in order to achieve highly believable results. We evaluate the performance of our method via quantitative and crowd-sourced experiments.

Paper

EyeOpener: Editing Eyes in the Wild. Zhixin Shu, Eli Shechtman, Dimitris Samaras, and Sunil Hadap. ACM Transactions on Graphics (ToG). (pdf)

Supplementary Material

Supplemental Document (pdf, 30 MB)

Dataset (coming soon)

Code and realism score regression model (coming soon)

Acknowledgement

The research was partially supported by a gift from Adobe Systems Inc., by NSF grant IIS-1161876, and by the SUBSAMPLE project of the DIGITEO Institute, France. We would like to acknowledge Jianchao Yang and Kalyan Sunkavalli for helpful discussions.