Interaction Design

SHOWCASE

"BUGZ" Eddie Luo

"Othello" Chih Ming Chen

"Soldier Puzzle" Amy Jen & Robert Chung

"Chinese Chess" Mike Chia & Karen Chau

"SimpleCircuit" Corey Grimes

"Flash Tracker" James Sullivan

"Duck Hunt" Chen-Yo Pao

"Star Wars" Long Tran

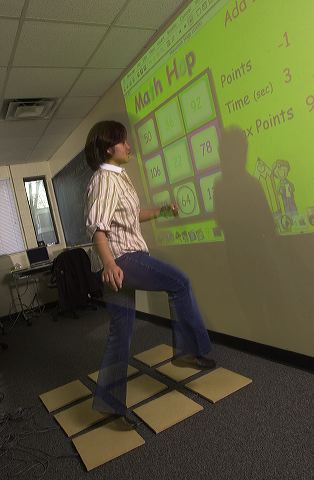

SmartStep: A Physical Computing Interface to Drill and Assess Basic Math Skills

Students:

Interface design by Mike Chen

CIT conference abstract:

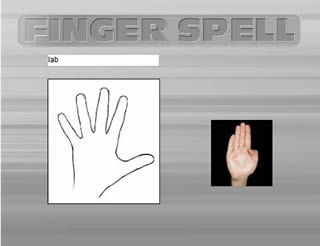

FingerSpell: A Physical Computing Interface to Translate ASL Gestures into Synthesized Speech

Students:

CIT conference abstract:

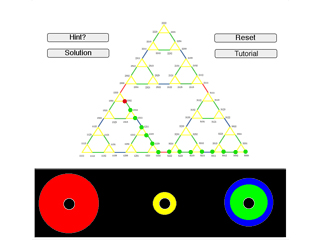

Tower of Hanoi: A Computer Vision Application to Teach Advanced Math Concepts

Students:

URECA abstract: In this project we designed a computer-mediated learning task based on the traditional mathematics puzzle, the Towers of Hanoi. Targeted at middle and high school students, the application will help them learn advanced concepts, such as recursion and graph coloring, as they physically try to solve the puzzle in real time, working in groups of two or three. Our approach uses computer vision to track the location of puzzle pieces on a physical game board. Based on the state of the board, the application computes the optimal solution and can provide audio/visual clues to the student while explaining the principle behind the solution. Future work on the project involves adding a speech command interface, so students can query the application without having to shift their focus to a GUI.
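The recursion the abstract mentions can be illustrated with a minimal Python sketch. This is not the students' implementation: the function name `solve_from_state`, the state encoding (a list giving the peg of each disk), and the three-peg numbering are all assumptions made for illustration. It also assumes the vision system has already reduced the board to a legal state. The sketch places the largest misplaced disk first, which is the usual way to reach the shortest move sequence from an arbitrary legal position to a finished tower.

```python
def solve_from_state(pegs_of_disks, target):
    """Return a list of moves (disk, from_peg, to_peg) that stacks every disk on `target`.

    Hypothetical encoding: pegs_of_disks[i] is the peg (0, 1, or 2) that
    currently holds disk i, where disk 0 is the smallest.
    """
    state = list(pegs_of_disks)  # work on a copy so the caller's list is untouched
    moves = []

    def gather(n, dest):
        # Bring disks 0..n-1 onto peg `dest`, handling the largest one first.
        if n == 0:
            return
        disk = n - 1
        src = state[disk]
        if src == dest:
            # The largest disk is already in place; only the smaller ones remain.
            gather(n - 1, dest)
            return
        spare = 3 - src - dest       # the peg that is neither source nor destination
        gather(n - 1, spare)         # clear all smaller disks out of the way
        moves.append((disk, src, dest))
        state[disk] = dest
        gather(n - 1, dest)          # restack the smaller disks on top

    gather(len(state), target)
    return moves
```

For example, `solve_from_state([0, 0, 0], target=2)` yields the classic seven-move solution for three disks, while a partially solved board such as `[1, 2, 2]` needs only the single move `(0, 1, 2)`. A tutoring application could present the first move in the returned list as its next hint.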