Visual Analytics and Imaging Laboratory (VAI Lab), Computer Science Department, Stony Brook University, NY

Abstract: Several prominent studies have shown that the imbalanced on-screen exposure of observable phenotypic traits like gender and skin-tone in movies, TV shows, live telecasts, and other visual media can reinforce gender and racial stereotypes in society. Researchers and human rights organizations alike have long been calling to make media producers more aware of such stereotypes. While awareness among media producers is growing, balancing the presence of different phenotypes in a video requires substantial manual effort and can typically be done only in the post-production phase. The task becomes even more challenging in the case of a live telecast, where video producers must make instantaneous decisions with no post-production phase to refine or revert a decision. In this paper, we propose Screen-Balancer, an interactive tool that assists media producers in balancing the presence of different phenotypes in a live telecast.

The design of Screen-Balancer is informed by a field study conducted in a professional live studio. Screen-Balancer analyzes the facial features of the actors to determine phenotypic traits using facial detection packages; it then provides real-time visual feedback for interactive moderation of gender and skin-tone distributions. To demonstrate the effectiveness of our approach, we conducted a user study with 20 participants and asked them to compose live telecasts from a set of video streams simulating different camera angles and featuring several male and female actors with different skin-tones. The study revealed that the participants were able to reduce the difference in screen time between male and female actors by 43%, and between light-skinned and dark-skinned actors by 44%, thus showing the promise and potential of using such a tool in commercial production systems.

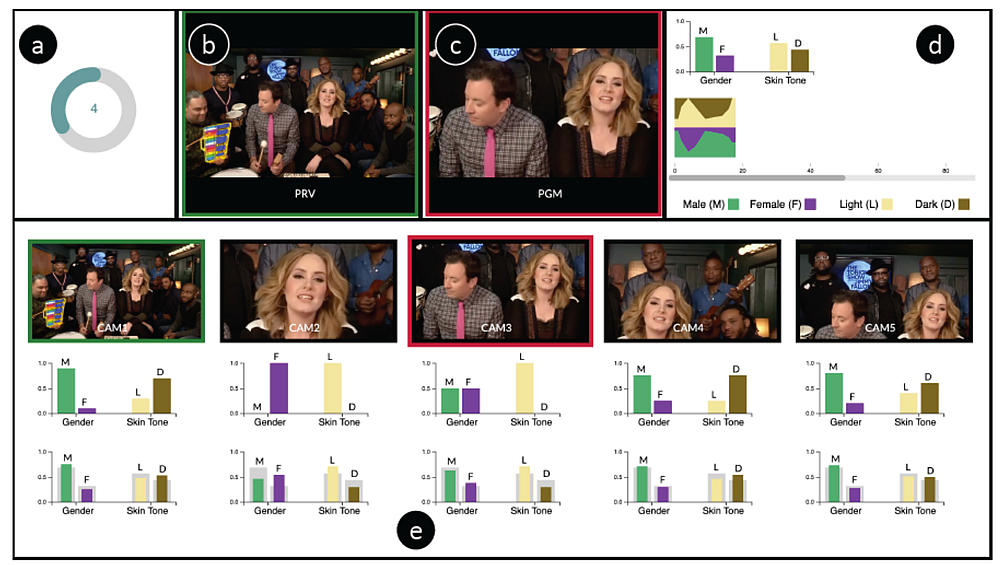

Teaser: The screenshot below shows the various components and the workflow of our tool:

The Components of Screen-Balancer: (a) a countdown timer showing when the charts and graphs will next be updated; (b) the preview stream, which video producers use to isolate a particular camera feed from (e) before selecting it for the output stream; (c) the output stream; (d) bar charts and a stream graph showing the cumulative distribution and the timeline distribution of sensitive attributes (e.g., gender, skin-tone) in the output stream so far; (e) the input streams (e.g., cameras with different angles and views), each accompanied by one bar chart and one bullet chart: one shows the screen time of different genders and skin-tones in that stream 10 seconds in advance, and the other shows how choosing that particular stream will affect the overall screen-presence distribution of genders and skin-tones in (d) over the next 10 seconds.
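The look-ahead idea in (d) and (e) can be illustrated with a small Python sketch. This is not the tool's implementation: the function names, the dictionary layout, and the max-minus-min imbalance measure are all assumptions made for illustration. It takes the per-group screen time accumulated in the output stream, adds the screen time a candidate stream would contribute over its next window, and reports the resulting shares.

```python
from collections import Counter


def distribution(seconds_by_group):
    """Normalize per-group screen time (in seconds) into shares summing to 1."""
    total = sum(seconds_by_group.values())
    if total == 0:
        return {group: 0.0 for group in seconds_by_group}
    return {group: secs / total for group, secs in seconds_by_group.items()}


def projected_distribution(cumulative, lookahead):
    """Shares in the output stream if a candidate stream is aired next.

    cumulative: seconds each group has appeared in the output so far.
    lookahead:  seconds each group appears in the candidate stream's
                upcoming window (10 seconds in the description above).
    """
    combined = Counter(cumulative)
    combined.update(lookahead)  # adds the look-ahead seconds per group
    return distribution(dict(combined))


def imbalance(shares):
    """Hypothetical measure: gap between largest and smallest share."""
    return max(shares.values()) - min(shares.values())


def most_balancing_stream(cumulative, candidates):
    """Name of the candidate stream whose selection minimizes the imbalance."""
    return min(
        candidates,
        key=lambda name: imbalance(
            projected_distribution(cumulative, candidates[name])
        ),
    )


# Illustrative numbers only; they are not taken from the study.
output_so_far = {"female": 40, "male": 80}
streams = {
    "cam1": {"female": 8, "male": 2},
    "cam2": {"female": 1, "male": 9},
}
print(most_balancing_stream(output_so_far, streams))
```

In the tool itself the choice stays with the producer; a ranking like this only mirrors the information the bullet charts present for each input stream.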

Video: Watch it to get a quick overview:

Paper: Md. N Hoque, S. Billah, N. Saquib, K. Mueller, “Toward Interactively Balancing the Screen Time of Actors Based on Observable Phenotypic Traits in Live Telecast,” ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW), October 17-21, 2020. PDF