Transferring Color To Greyscale Images

Tomihisa Welsh, Michael Ashikhmin, Klaus Mueller

Abstract

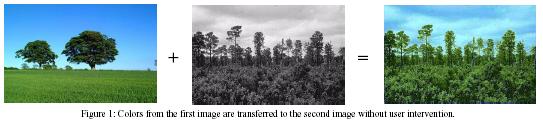

We introduce a general technique for “colorizing” greyscale images by transferring color between a source color image and a destination greyscale image. Although the general problem of adding chromatic values to a greyscale image has no exact, objective solution, the current approach attempts to provide a method that helps minimize the amount of human labor required for this task. Rather than choosing RGB colors from a palette to color individual components, we transfer the entire color “mood” from the source to the target image by matching luminance and texture information between the images. We choose to transfer only chromaticity information and retain the original luminance values of the target image. The procedure is further enhanced by allowing the user to match areas of the two images with rectangular swatches. We show that this simple technique can be successfully applied to a variety of images and video, provided that texture and luminance are sufficiently distinct. The images we have generated demonstrate the potential and utility of our technique in a diverse set of application domains.

Full paper to appear in SIGGRAPH 2002 (3.9 Mb)

Method

Our concept of transferring color from one image to another is inspired

by work by Reinhard et al. [CG&A Sept/Oct 2001] in which color is transferred

between two color images. In their work, colors from a source image are

transferred to a second colored image using a simple but surprisingly successful

procedure. Since both the source and target spaces have color, matching

involves 3 channels. However, greyscale images only contain one color

channel (luminance), so we can only match values between the luminance

channel of the source and target images. We use neighborhood statistics

to help guide the matching process. Once the best match is found,

we transfer only color value for that pixel and retain the original luminance

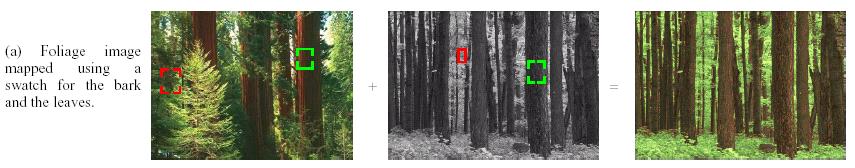

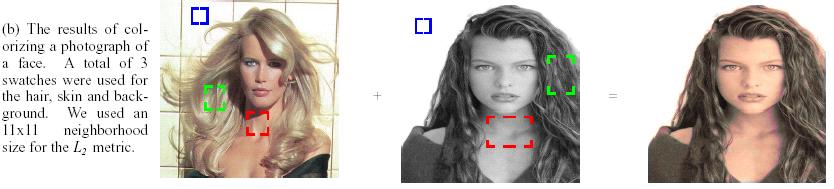

value of the target pixel. In difficult cases, a few swatches can

be used to aid the matching process between the source and the target image.

After color is transferred between the source and the target swatches,

the final colors are assigned to each pixel in the greyscale image by matching

each greyscale image pixel to a pixel in the target swatches using the

L2 distance metric. Thus, each pixel match is determined by matching it

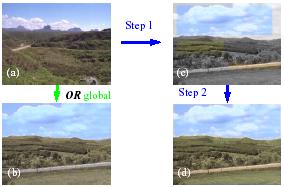

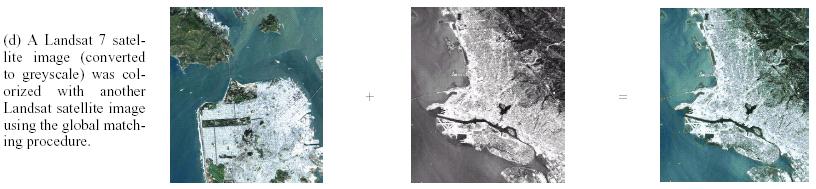

only to other pixels within the same image. Figure 2 shows the basic

idea.

Figure 2: The two variations of the algorithm. (a) Source color image. (b) Result of the basic, global algorithm (no swatches). (c) Greyscale image with swatch colors transferred from Figure 2a. (d) Result using swatches.
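The basic, global variation can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the implementation from the paper: the page does not name a color space, so the sketch uses Rec. 601 luma weights and stores chroma as the per-channel offset of RGB from luma; the neighborhood statistic is taken to be the standard deviation of luminance in a 5x5 window; luminance and standard deviation are weighted equally; and the source is randomly subsampled to at most 200 pixels for speed. All function and parameter names (`luma`, `features`, `build_samples`, `colorize`, `n_samples`, `radius`) are hypothetical. Images are nested lists with components in [0, 1].

```python
import random
import statistics

LUMA = (0.299, 0.587, 0.114)  # Rec. 601 weights: an assumption, not from the page


def luma(rgb):
    """Luminance of an (r, g, b) triple with components in [0, 1]."""
    return sum(w * c for w, c in zip(LUMA, rgb))


def features(lum, x, y, radius=2):
    """(luminance, neighborhood std dev) at (x, y) of a 2-D luminance grid."""
    h, w = len(lum), len(lum[0])
    window = [lum[j][i]
              for j in range(max(0, y - radius), min(h, y + radius + 1))
              for i in range(max(0, x - radius), min(w, x + radius + 1))]
    return lum[y][x], statistics.pstdev(window)


def build_samples(source_rgb, n_samples=200, seed=0, radius=2):
    """Collect (features, chroma offsets) for a random subset of source pixels."""
    lum = [[luma(p) for p in row] for row in source_rgb]
    coords = [(x, y) for y in range(len(lum)) for x in range(len(lum[0]))]
    if len(coords) > n_samples:
        coords = random.Random(seed).sample(coords, n_samples)
    samples = []
    for x, y in coords:
        # Chroma is stored as each channel's offset from the pixel's luminance.
        chroma = tuple(c - lum[y][x] for c in source_rgb[y][x])
        samples.append((features(lum, x, y, radius), chroma))
    return samples


def colorize(target_grey, samples, radius=2):
    """Give each grey pixel the chroma of its best-matching source sample."""
    out = []
    for y, row in enumerate(target_grey):
        out_row = []
        for x, grey in enumerate(row):
            l, s = features(target_grey, x, y, radius)
            # Equal-weight squared distance on luminance and std dev (assumed).
            _, chroma = min(
                samples,
                key=lambda smp: (smp[0][0] - l) ** 2 + (smp[0][1] - s) ** 2)
            # Keep the target luminance, add the source chroma, clamp to [0, 1].
            out_row.append(tuple(min(1.0, max(0.0, grey + c)) for c in chroma))
        out.append(out_row)
    return out
```

Because the luma weights sum to one, adding the stored offsets to the target grey value leaves the target luminance unchanged whenever no channel is clamped. Calling `colorize(target_grey, build_samples(source_rgb))` would run the whole pipeline on a pair of small images.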

Results

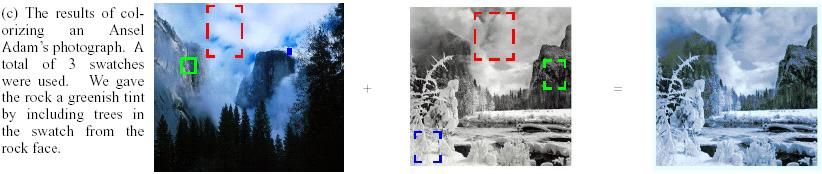

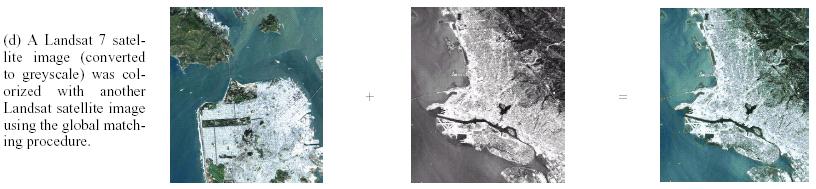

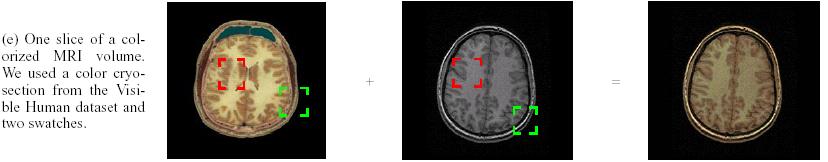

Figure 3:

Please Note:

Figure 1: Source courtesy (c) Ian Britton - FreeFoto.com

Figure 3a: Images courtesy of Adam Superchi and Philip Greenspun.

Figure 3c: The Ansel Adams photograph was originally commissioned by the Department of the Interior. The source image is courtesy of Paul Kienitz.

Figure 3f: The SEM photograph is courtesy of Scott Chumbley.

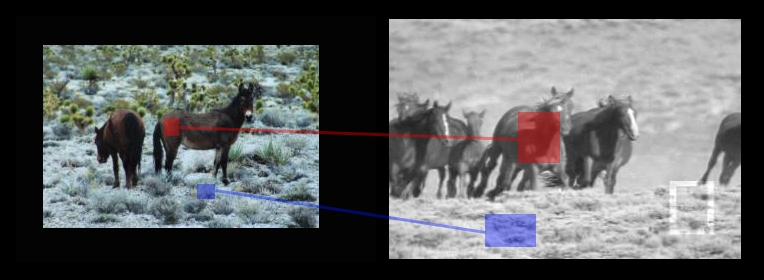

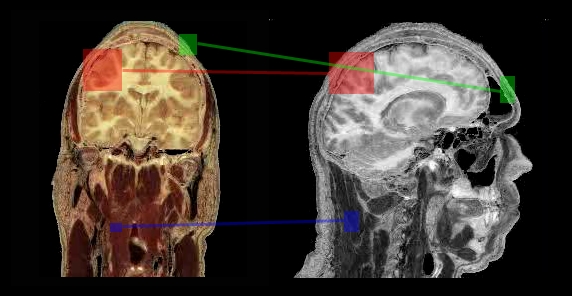

Video

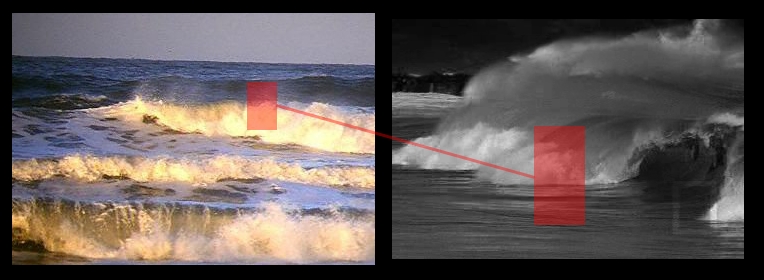

For all three movie clips, we used swatches to colorize a single frame in the movie sequence. The colorized target swatch for that frame was then used as the source samples for all other frames in the movie sequence. Note that the original target movie clips were in color and were converted to greyscale for demonstration purposes.
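The reuse of one colorized swatch across frames could look roughly like the following Python sketch. It is an illustration under assumptions rather than the code used for these clips: pixels are matched by the L2 distance between small luminance neighborhoods (3x3 here, with coordinates clamped at the border), Rec. 601 luma weights are used, and chroma is stored as the per-channel offset of RGB from luma. The names `patch`, `swatch_samples`, `colorize_frame`, and `colorize_clip` are hypothetical. Frames are nested lists of grey values in [0, 1], and the swatch is a nested list of RGB triples.

```python
LUMA = (0.299, 0.587, 0.114)  # Rec. 601 weights: an assumption, not from the page


def luma(rgb):
    """Luminance of an (r, g, b) triple with components in [0, 1]."""
    return sum(w * c for w, c in zip(LUMA, rgb))


def patch(lum, x, y, radius=1):
    """Luminance neighborhood around (x, y), clamping coordinates at the border."""
    h, w = len(lum), len(lum[0])
    return [lum[min(h - 1, max(0, y + dy))][min(w - 1, max(0, x + dx))]
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)]


def swatch_samples(colored_swatch, radius=1):
    """Turn a colorized swatch into (luminance patch, chroma offsets) samples."""
    lum = [[luma(p) for p in row] for row in colored_swatch]
    return [(patch(lum, x, y, radius),
             tuple(c - lum[y][x] for c in colored_swatch[y][x]))
            for y in range(len(lum)) for x in range(len(lum[0]))]


def colorize_frame(grey_frame, samples, radius=1):
    """Color one greyscale frame from the swatch samples."""
    out = []
    for y, row in enumerate(grey_frame):
        out_row = []
        for x, grey in enumerate(row):
            p = patch(grey_frame, x, y, radius)
            # Squared L2 distance between luminance neighborhoods.
            _, chroma = min(
                samples,
                key=lambda s: sum((a - b) ** 2 for a, b in zip(s[0], p)))
            # Keep the frame's luminance, add the swatch chroma, clamp to [0, 1].
            out_row.append(tuple(min(1.0, max(0.0, grey + c)) for c in chroma))
        out.append(out_row)
    return out


def colorize_clip(grey_frames, colored_swatch, radius=1):
    """Reuse one colorized swatch as the sample set for every frame."""
    samples = swatch_samples(colored_swatch, radius)
    return [colorize_frame(frame, samples, radius) for frame in grey_frames]
```

Building the sample set once and reusing it for every frame mirrors the description above; the exhaustive search per pixel is kept for clarity and would be slow on full-resolution video.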

Waves

View MPEG Movie

Video courtesy of National Geographic.

Horses

View MPEG video clip

Video courtesy of National Geographic.

Brain Volume

This brain volume was originally from the Visible Human dataset.

The movie was obtained at http://www.cs.adelaide.edu.au.

View MPEG video clip
