Planar Object Tracking in the Wild: A Benchmark

Abstract

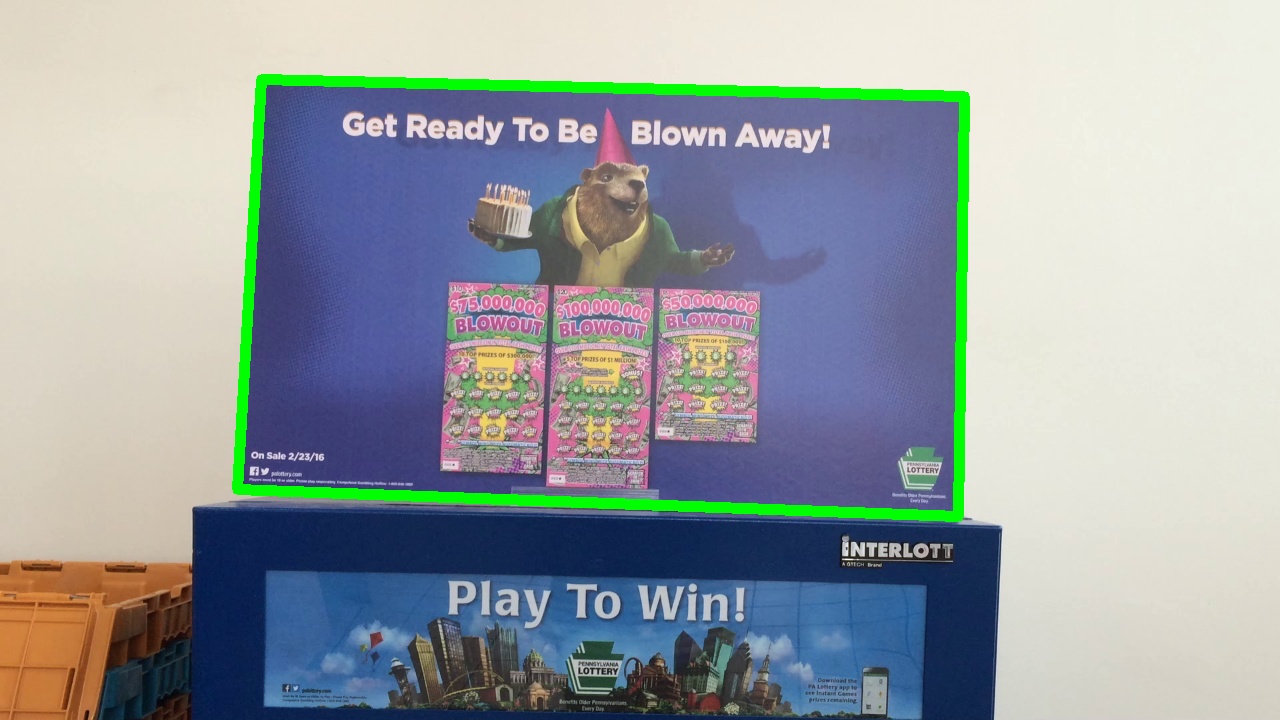

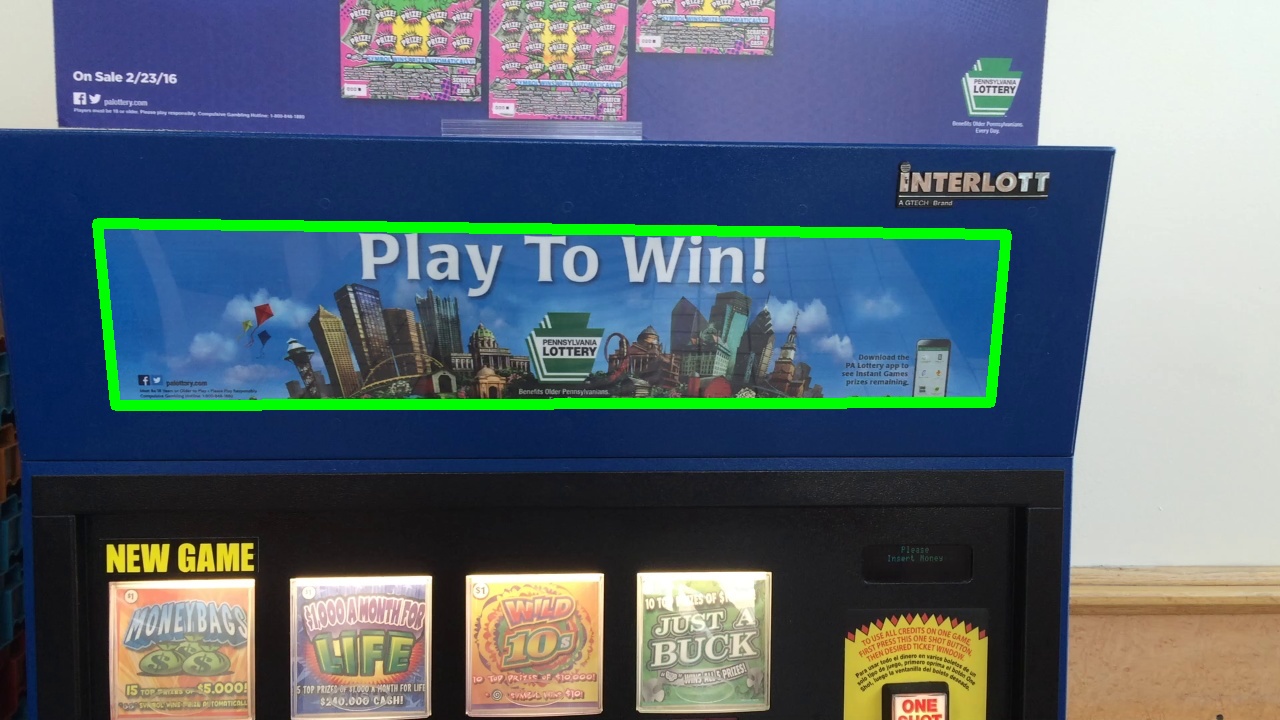

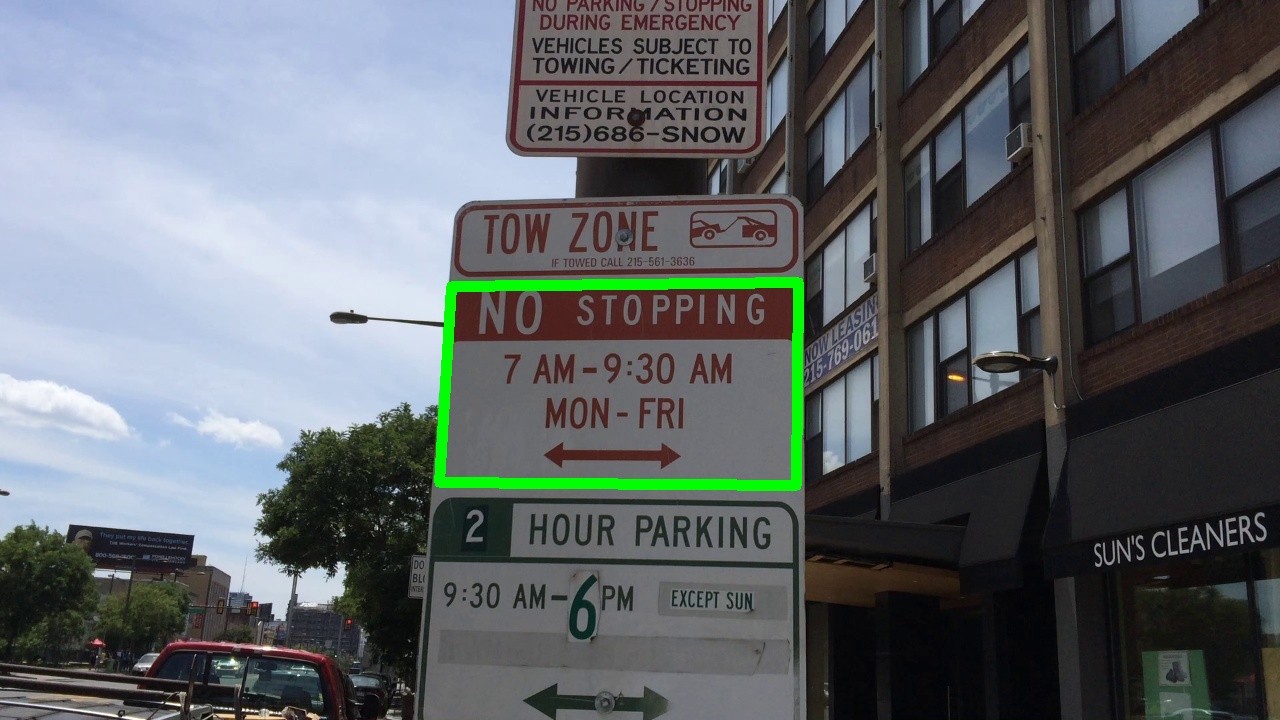

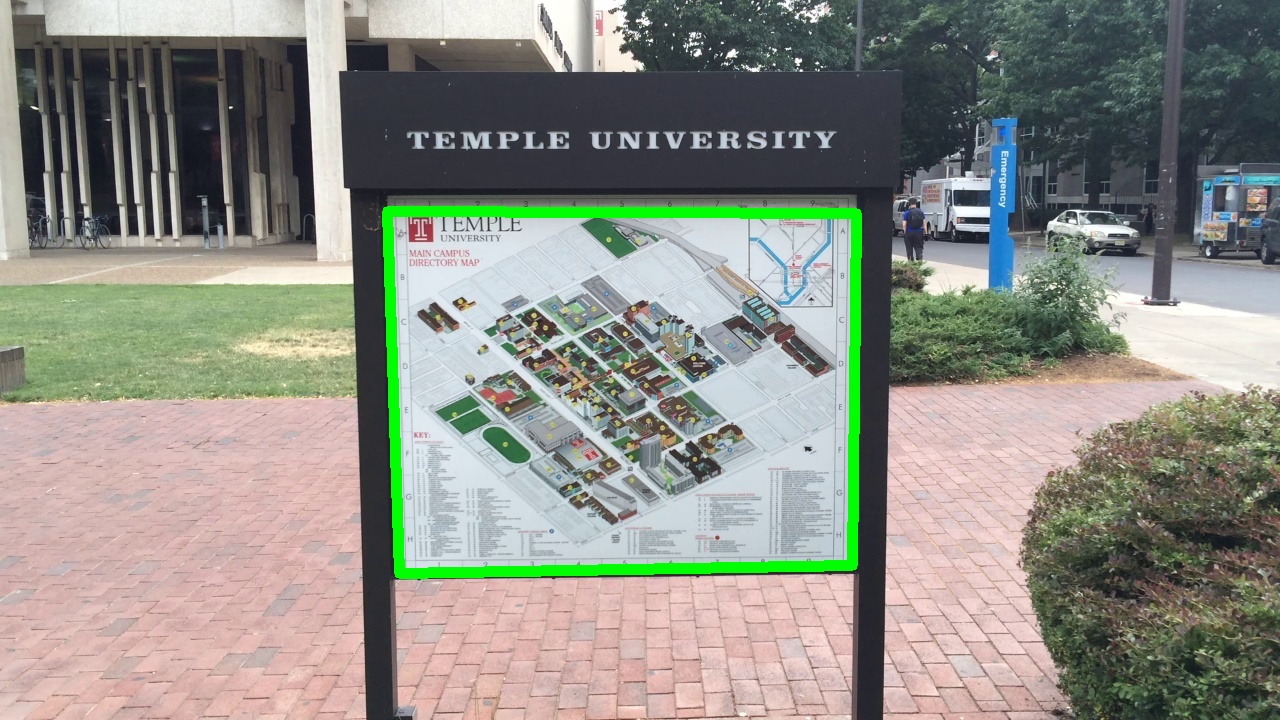

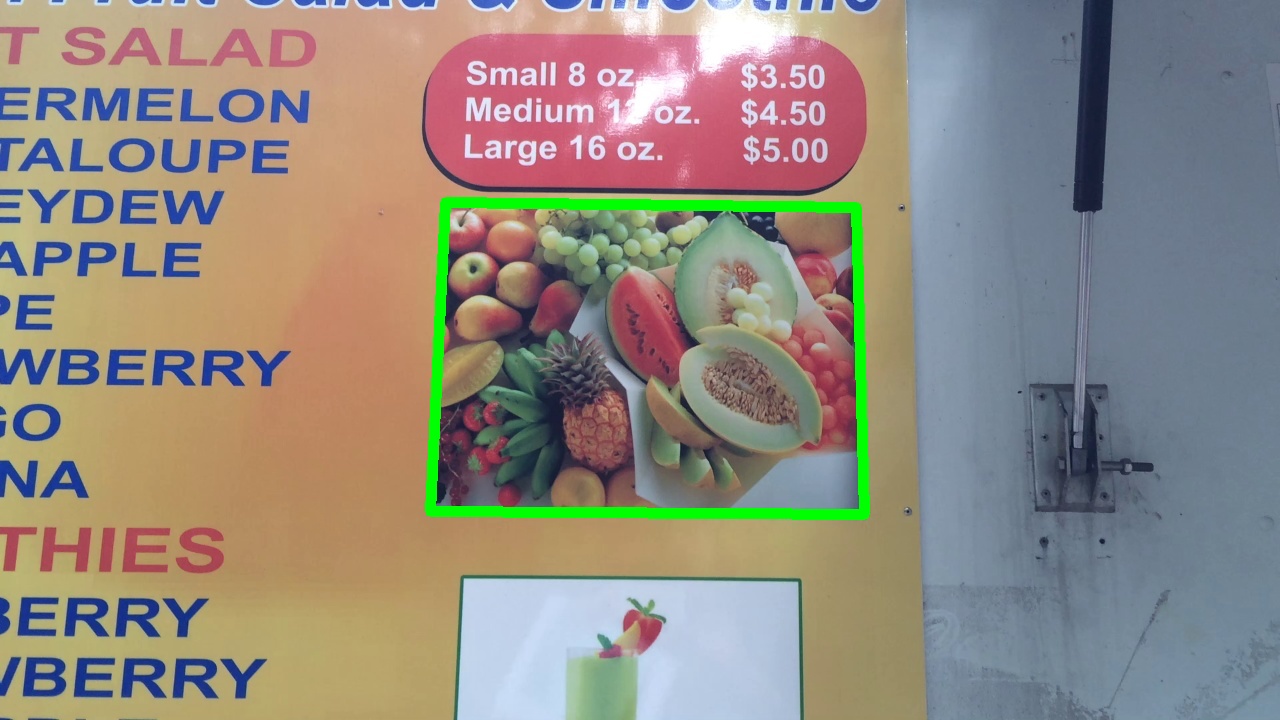

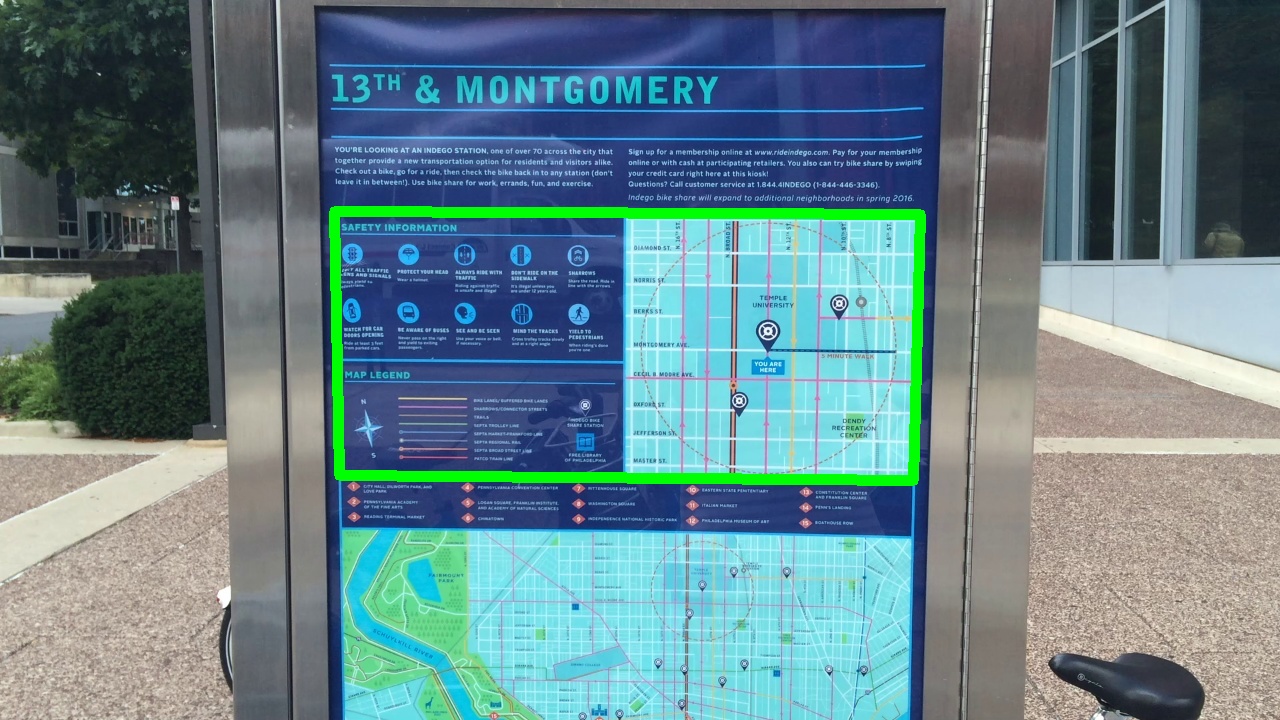

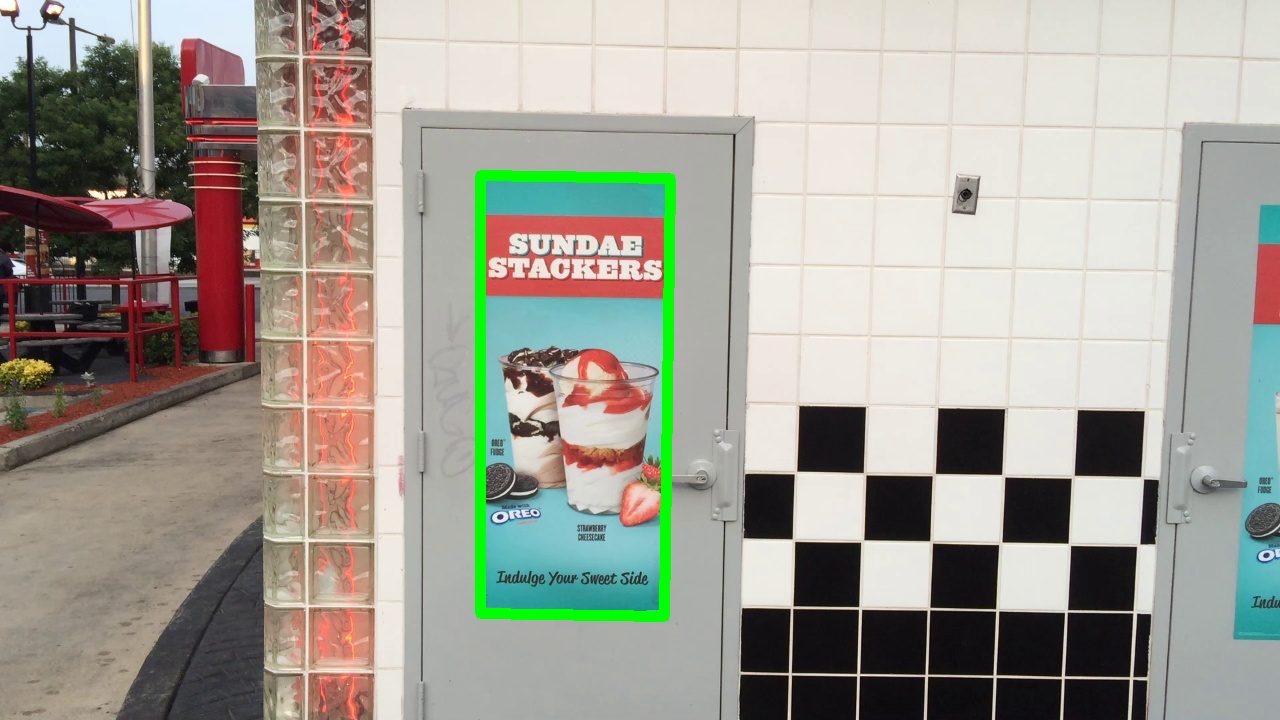

Planar object tracking is an actively studied problem in vision-based robotic applications. While several benchmarks have been constructed for evaluating state-of-the-art algorithms, there is a lack of video sequences captured in the wild rather than in constrained laboratory environments. In this paper, we present a carefully designed planar object tracking benchmark containing 210 videos of 30 planar objects sampled in the natural environment. In particular, for each object, we shoot seven videos involving various challenging factors, namely scale change, rotation, perspective distortion, motion blur, occlusion, out-of-view, and unconstrained. The ground truth is carefully annotated semi-manually to ensure its quality. Moreover, eleven state-of-the-art algorithms are evaluated on the benchmark using two evaluation metrics, with detailed analysis provided for the evaluation results. We expect the proposed benchmark to benefit future studies on planar object tracking.

Reference

- P. Liang, Y. Wu, H. Lu, L. Wang, C. Liao, and H. Ling, "Planar Object Tracking in the Wild: A Benchmark," Proc. of IEEE Int'l Conference on Robotics and Automation (ICRA), 2018.

PDF

Dataset

All 210 sequences of the dataset can be downloaded separately for each object using the following links. The ground truth is available at download.

Evaluation

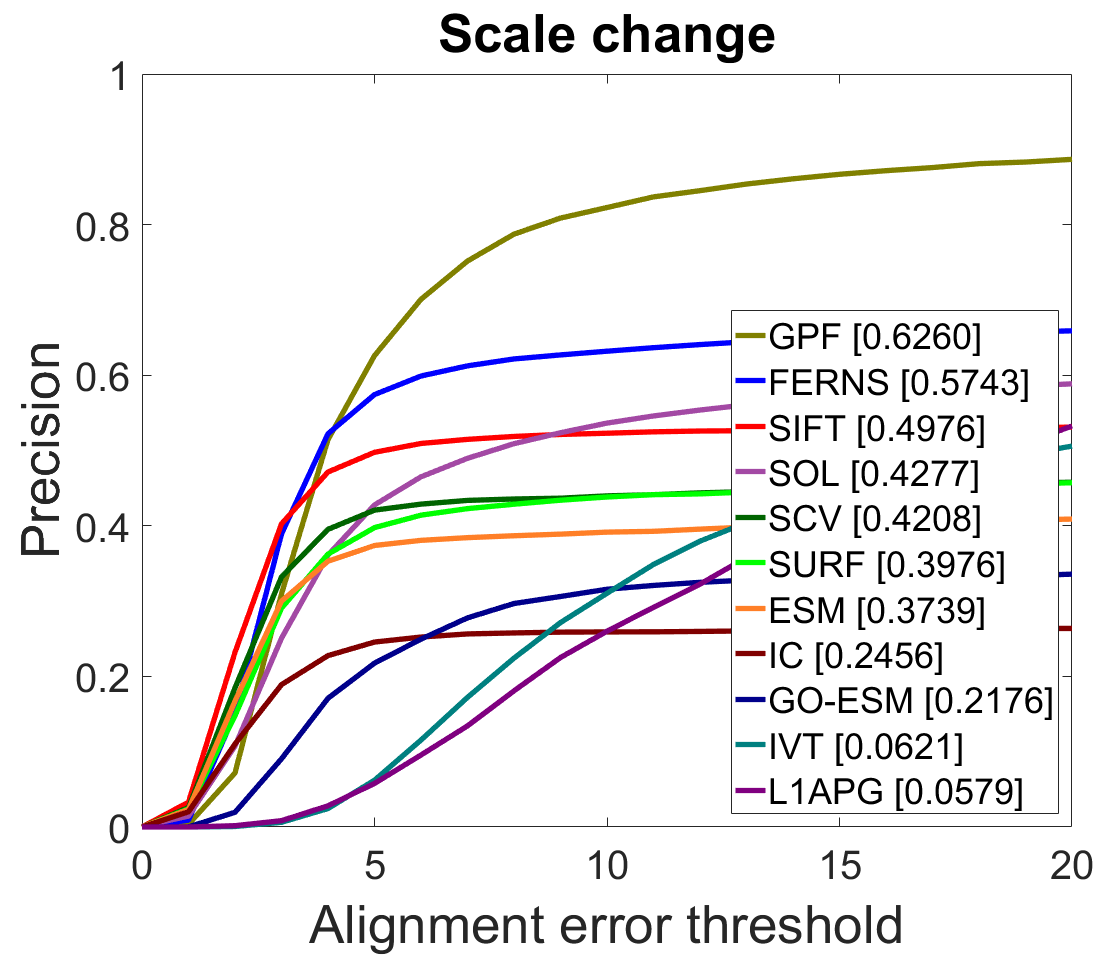

All the evaluation results and the Matlab code for generating the following figures are available at download.


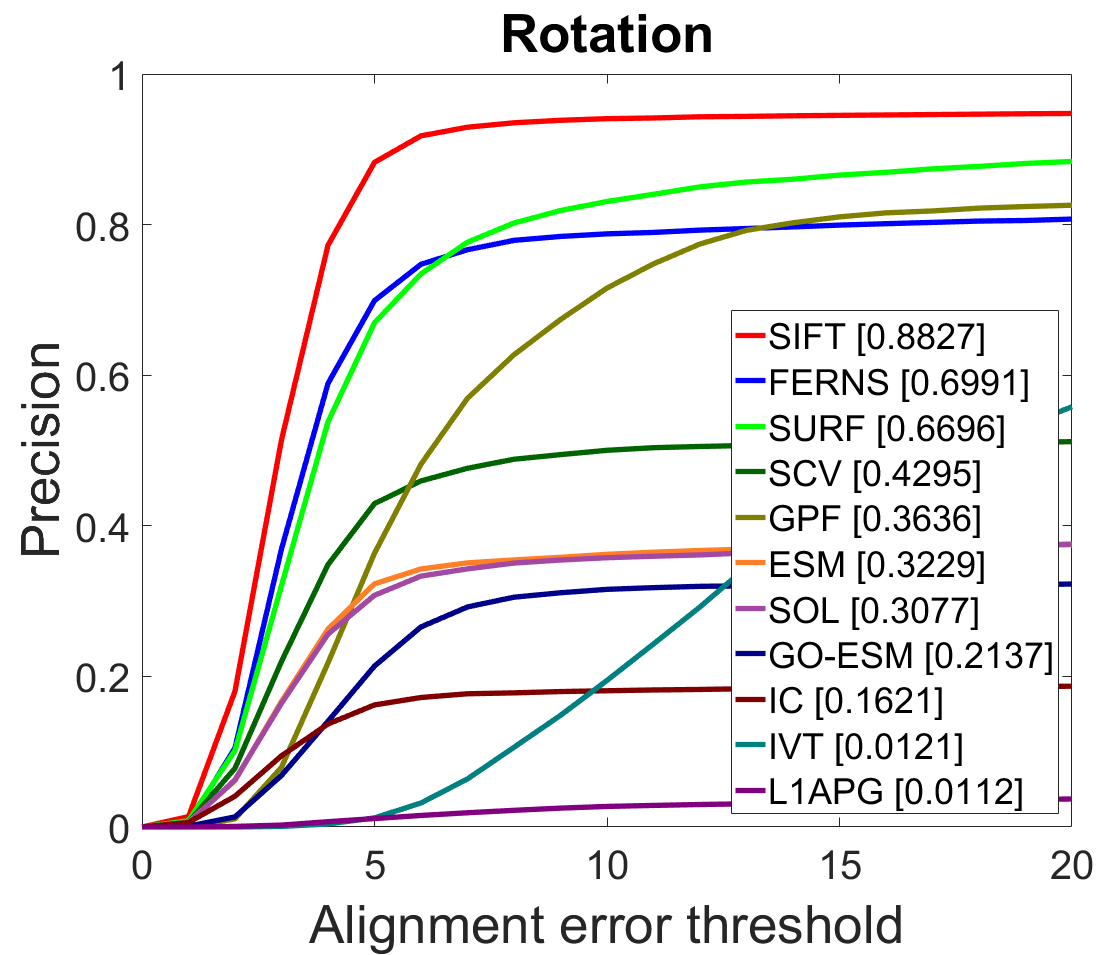

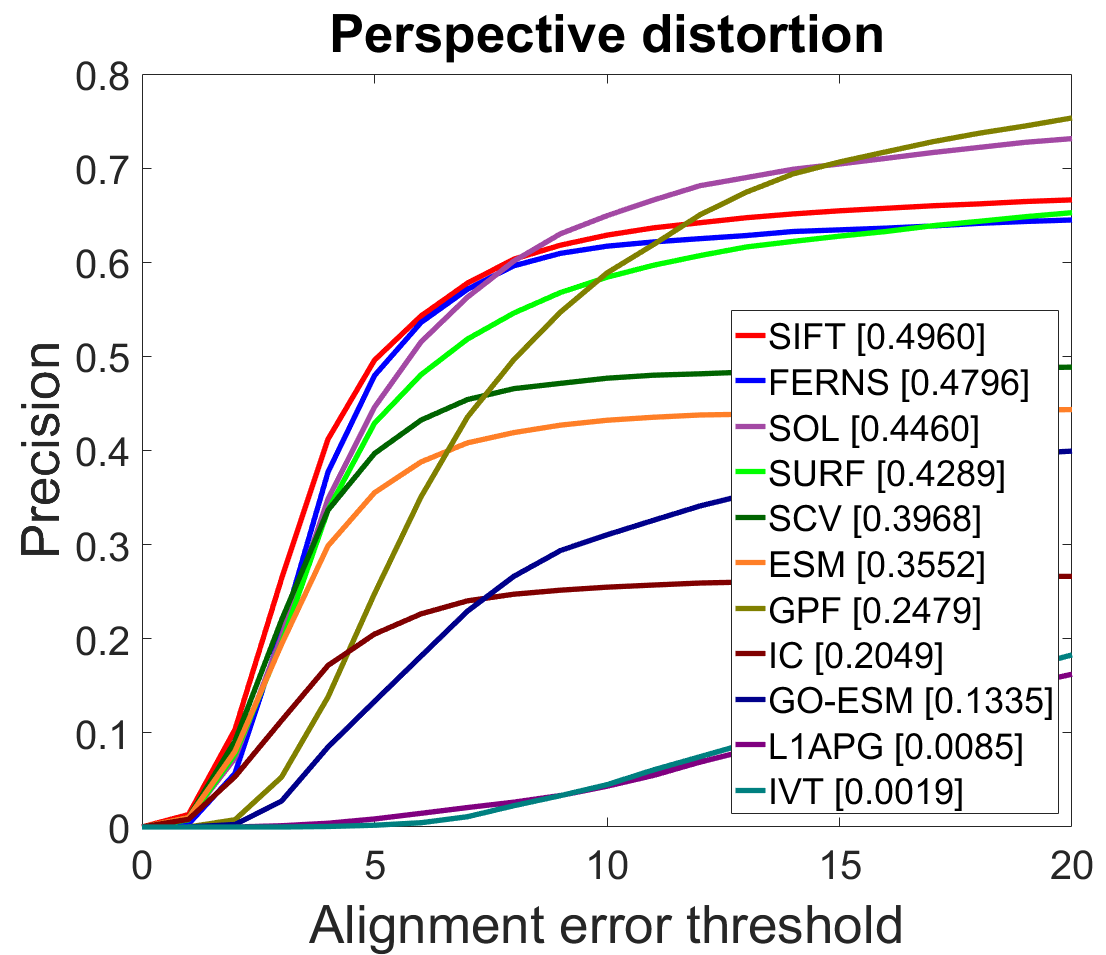

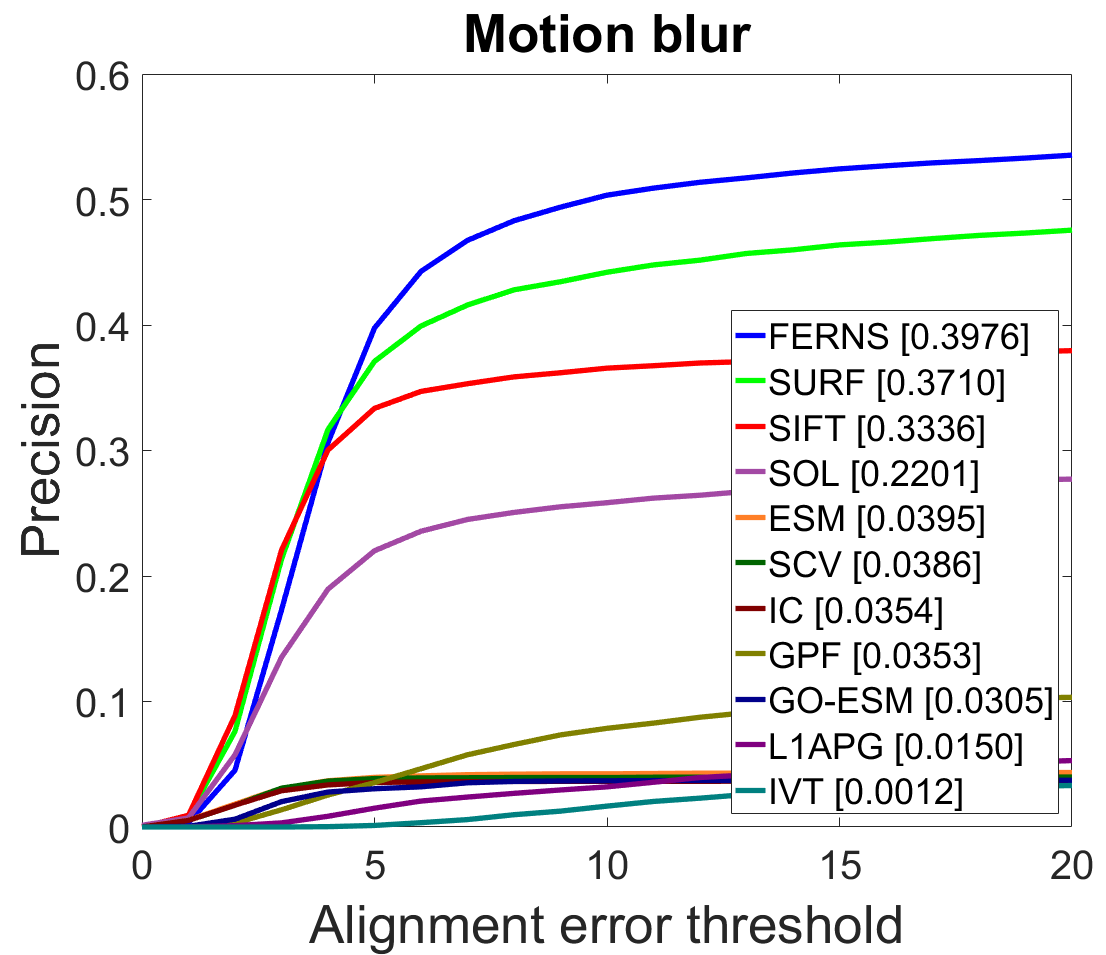

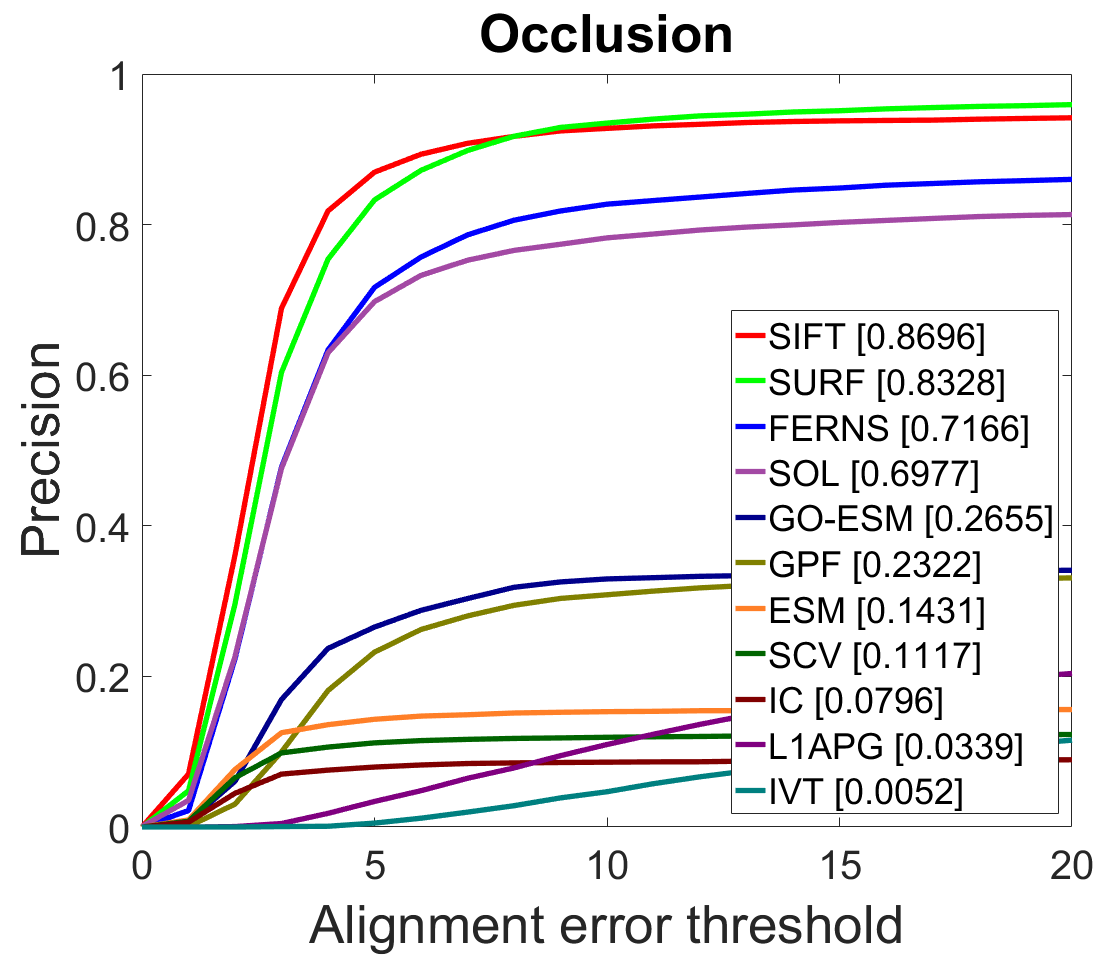

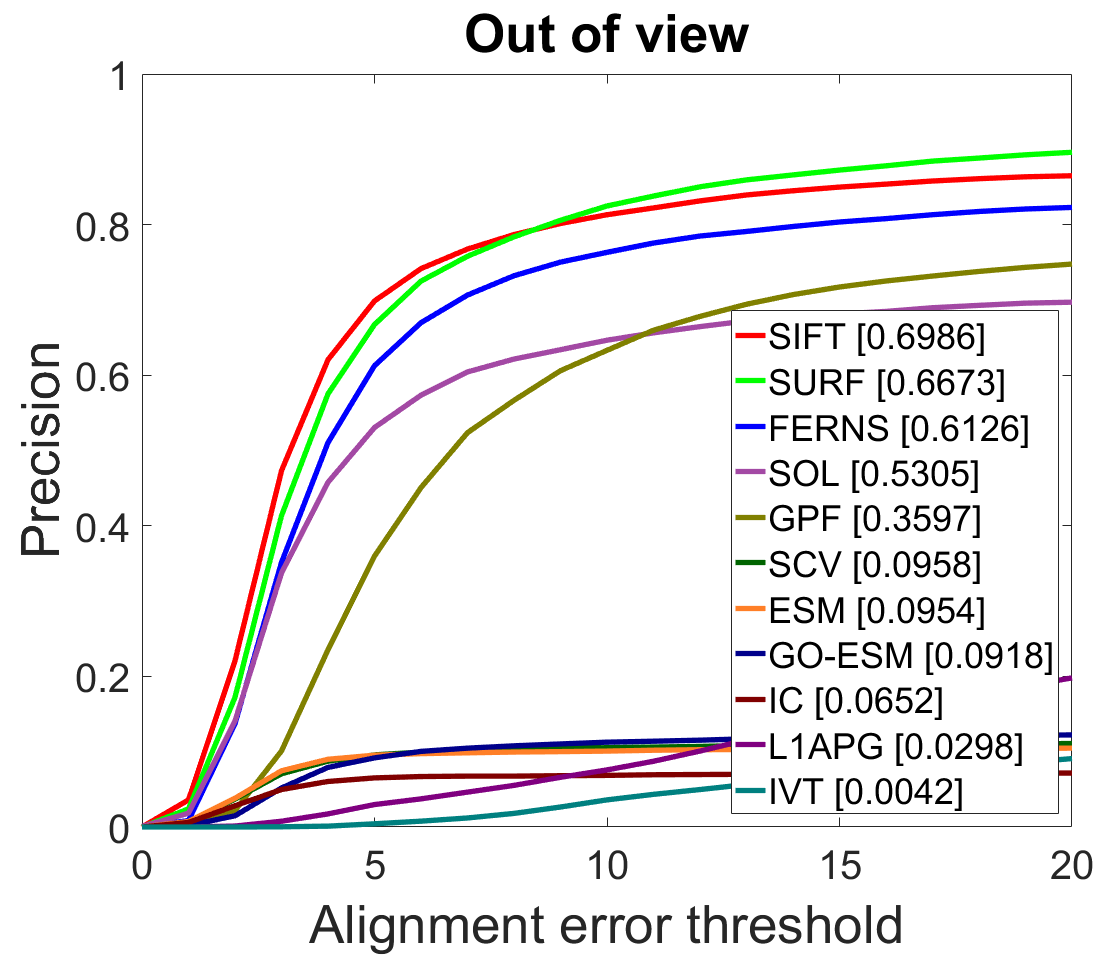

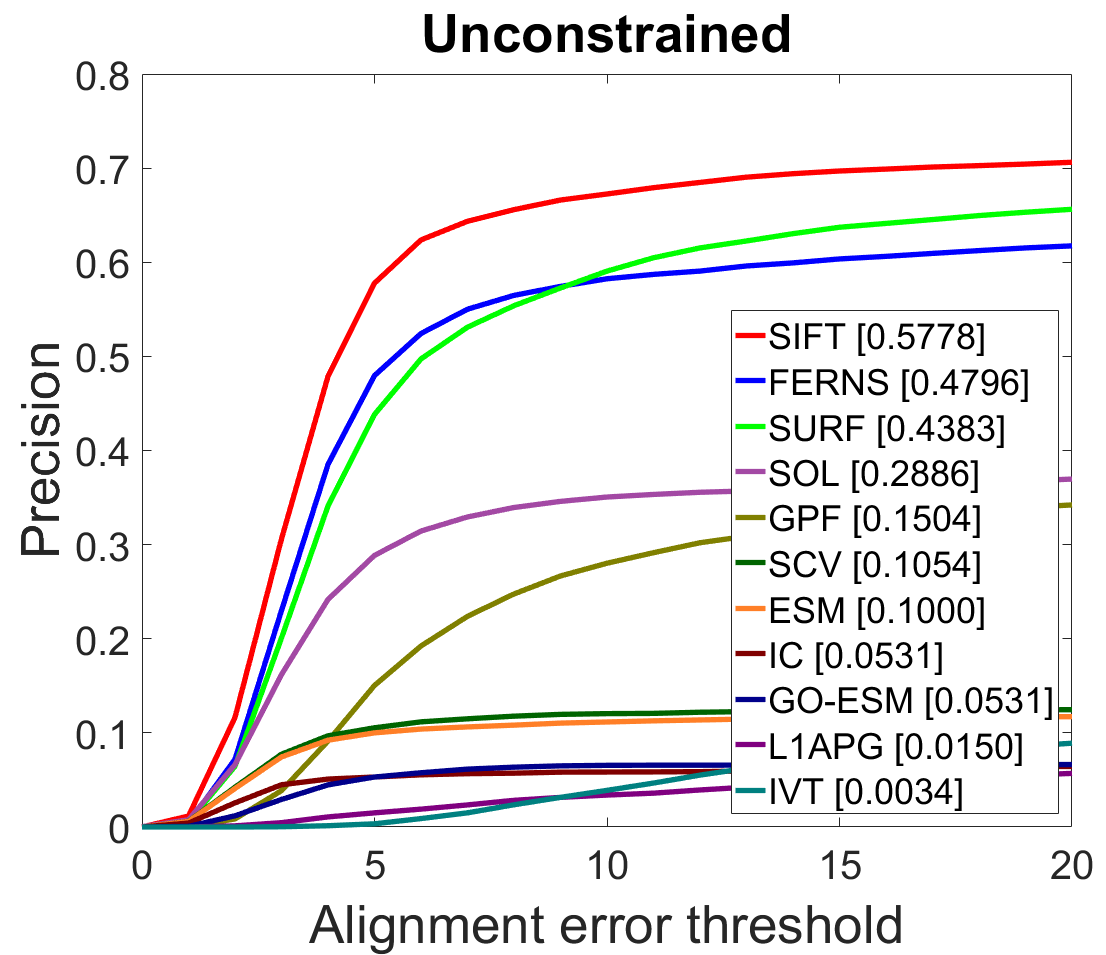

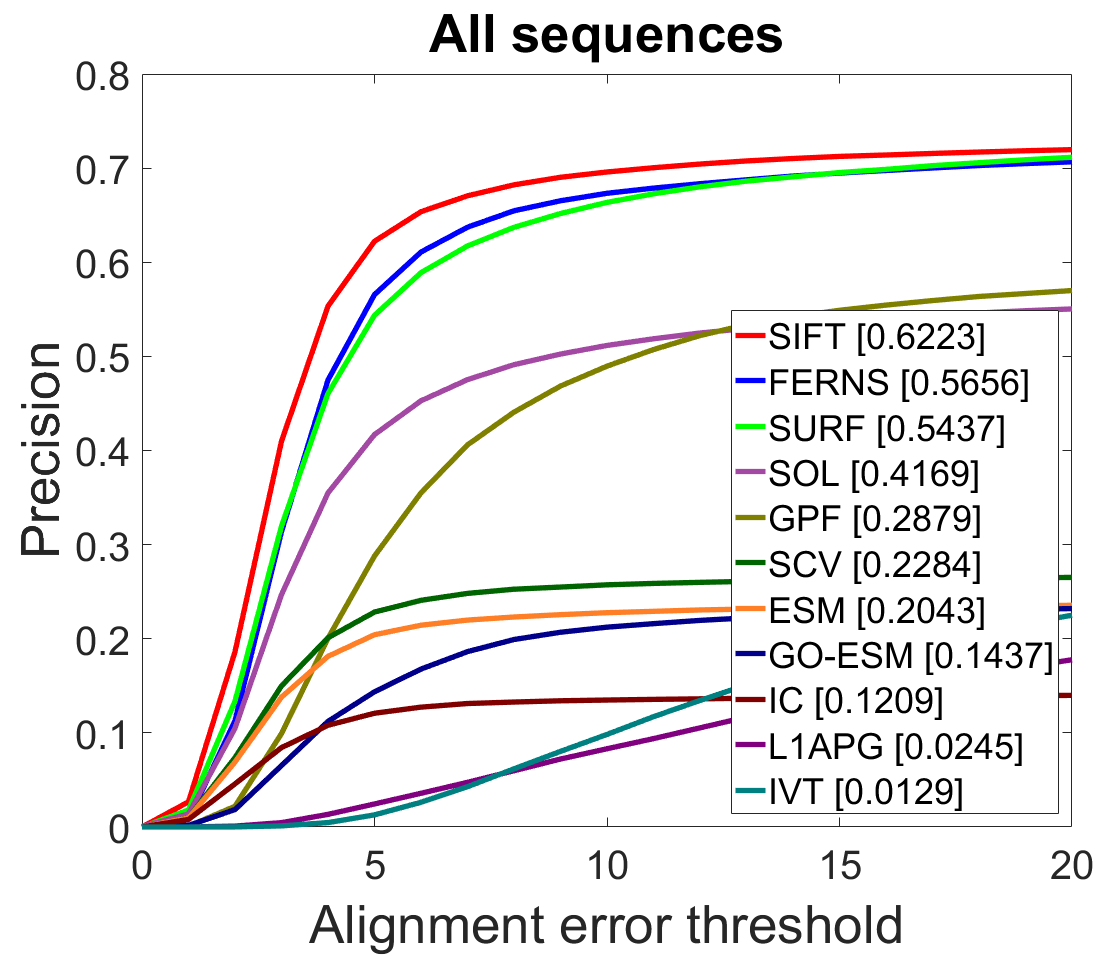

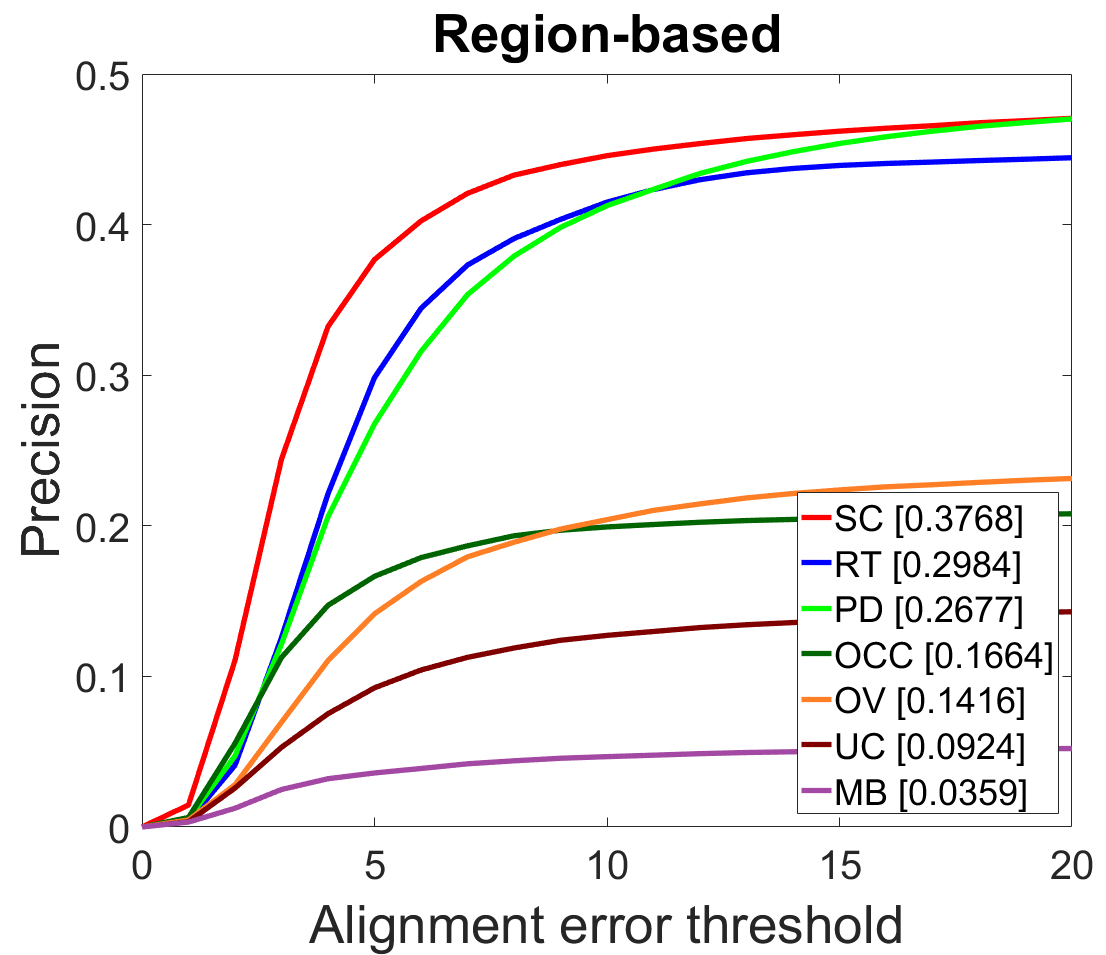

Fig. 1 Comparison of evaluated trackers using precision plots. The precision at the threshold tp=5 is used as a representative score.
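The caption above reports precision at a threshold but this page does not spell out the underlying error measure. The following is a minimal sketch, assuming the per-frame alignment error is the root mean square distance (in pixels) between the four tracked corner points and their ground-truth positions, and that precision is the fraction of frames whose error falls within the threshold. The function names, the array layout, and the use of `<=` rather than `<` are assumptions for illustration, not the released MATLAB code.

```python
import numpy as np

def alignment_error(pred_corners, gt_corners):
    """Per-frame RMS distance between tracked and ground-truth corners.

    Both inputs are assumed to have shape (n_frames, 4, 2): four (x, y)
    corner points per frame.
    """
    pred = np.asarray(pred_corners, dtype=float)
    gt = np.asarray(gt_corners, dtype=float)
    sq_dist = np.sum((pred - gt) ** 2, axis=-1)   # squared distance per corner
    return np.sqrt(np.mean(sq_dist, axis=-1))     # RMS over the four corners

def precision_at(errors, threshold=5.0):
    """Fraction of frames whose error is within the threshold (assumed <=)."""
    errors = np.asarray(errors, dtype=float)
    return float(np.mean(errors <= threshold))

def precision_curve(errors, thresholds):
    """Precision evaluated at each threshold, i.e. one precision plot."""
    return np.array([precision_at(errors, t) for t in thresholds])
```

Under these assumptions, the representative score of a tracker on a sequence would be `precision_at(alignment_error(pred, gt), 5.0)`, and sweeping the threshold with `precision_curve` gives the curve shown in a precision plot.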


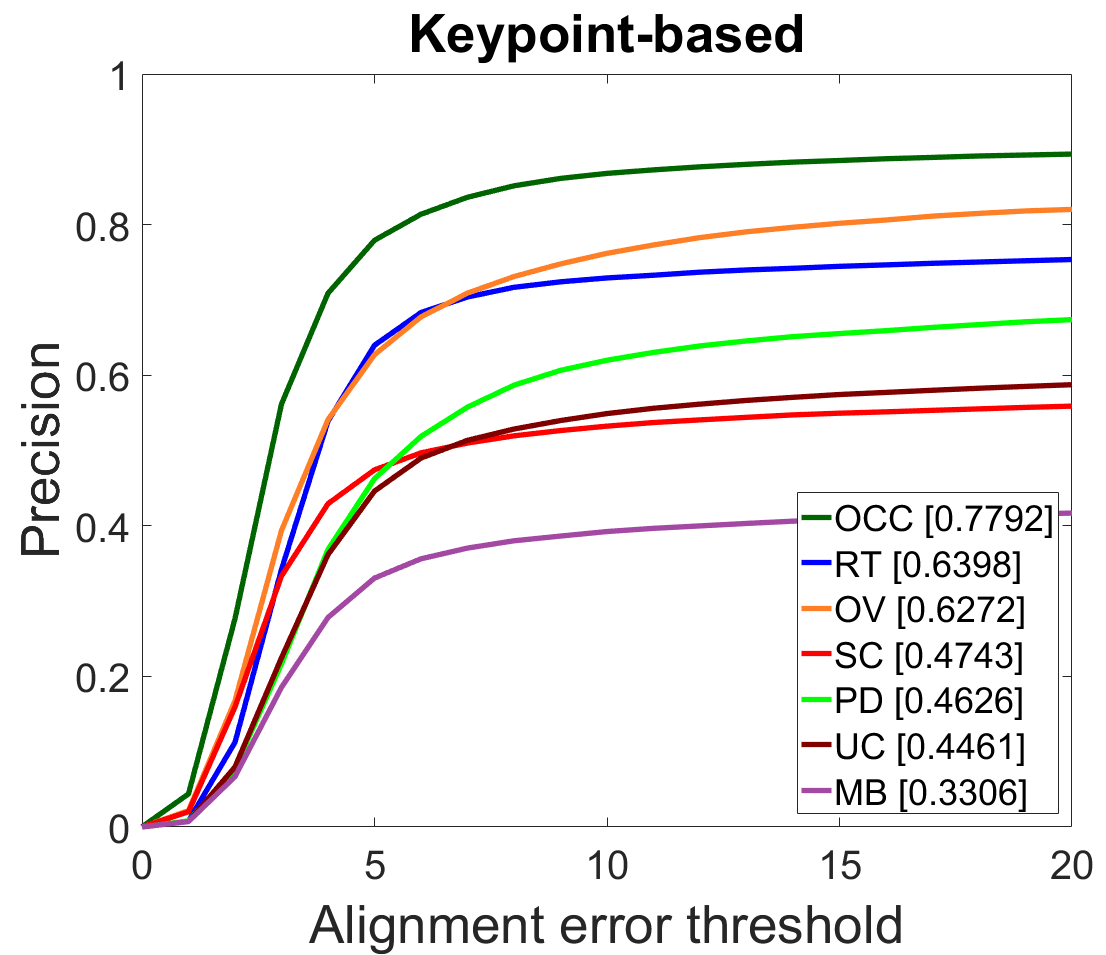

Fig. 2 The overall performance of trackers in two groups for different challenging factors. For each group, the overall performance is calculated by averaging the performances of trackers within this group. The precision at the threshold tp=5 is used.
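The group-level averaging described in the caption can be sketched as follows. This is only an illustration: the tracker names, group names, and precision values below are hypothetical placeholders, not results from the benchmark, and the dictionary layout is an assumption.

```python
from statistics import mean

def group_average(scores, groups):
    """Average per-factor precision over the trackers in each group.

    scores: {tracker: {factor: precision}}
    groups: {group_name: [tracker, ...]}
    """
    result = {}
    for group, members in groups.items():
        factors = scores[members[0]].keys()
        result[group] = {
            f: mean(scores[t][f] for t in members) for f in factors
        }
    return result

# Hypothetical placeholder data, for illustration only.
scores = {
    "tracker_1": {"rotation": 0.2, "occlusion": 0.6},
    "tracker_2": {"rotation": 0.4, "occlusion": 0.2},
    "tracker_3": {"rotation": 0.9, "occlusion": 0.5},
}
groups = {"group_a": ["tracker_1", "tracker_2"], "group_b": ["tracker_3"]}
averaged = group_average(scores, groups)
```

Each entry of `averaged` then holds one value per challenging factor for a group, which is the quantity a figure of this kind would plot.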

| Tracker | Reference |
| --- | --- |
| ESM | S. Benhimane and E. Malis, "Real-time image-based tracking of planes using efficient second-order minimization," IROS, 2004. |
| FERNS | M. Ozuysal, M. Calonder, V. Lepetit, and P. Fua, "Fast keypoint recognition using random ferns," PAMI, 2010. |
| GO-ESM | L. Chen, F. Zhou, Y. Shen, X. Tian, H. Ling, and Y. Chen, "Illumination insensitive efficient second-order minimization for planar object tracking," ICRA, 2017. |
| GPF | J. Kwon, H. S. Lee, F. C. Park, and K. M. Lee, "A geometric particle filter for template-based visual tracking," PAMI, 2014. |
| IC | S. Baker and I. Matthews, "Lucas-Kanade 20 years on: A unifying framework," IJCV, 2004. |
| IVT | D. A. Ross, J. Lim, R.-S. Lin, and M.-H. Yang, "Incremental learning for robust visual tracking," IJCV, 2008. |
| L1APG | C. Bao, Y. Wu, H. Ling, and H. Ji, "Real time robust L1 tracker using accelerated proximal gradient approach," CVPR, 2012. |
| SCV | R. Richa, R. Sznitman, R. Taylor, and G. Hager, "Visual tracking using the sum of conditional variance," IROS, 2011. |
| SIFT | D. G. Lowe, "Distinctive image features from scale-invariant keypoints," IJCV, 2004. |
| SOL | S. Hare, A. Saffari, and P. H. Torr, "Efficient online structured output learning for keypoint-based object tracking," CVPR, 2012. |
| SURF | H. Bay, A. Ess, T. Tuytelaars, and L. Van Gool, "Speeded-up robust features (SURF)," CVIU, 2008. |