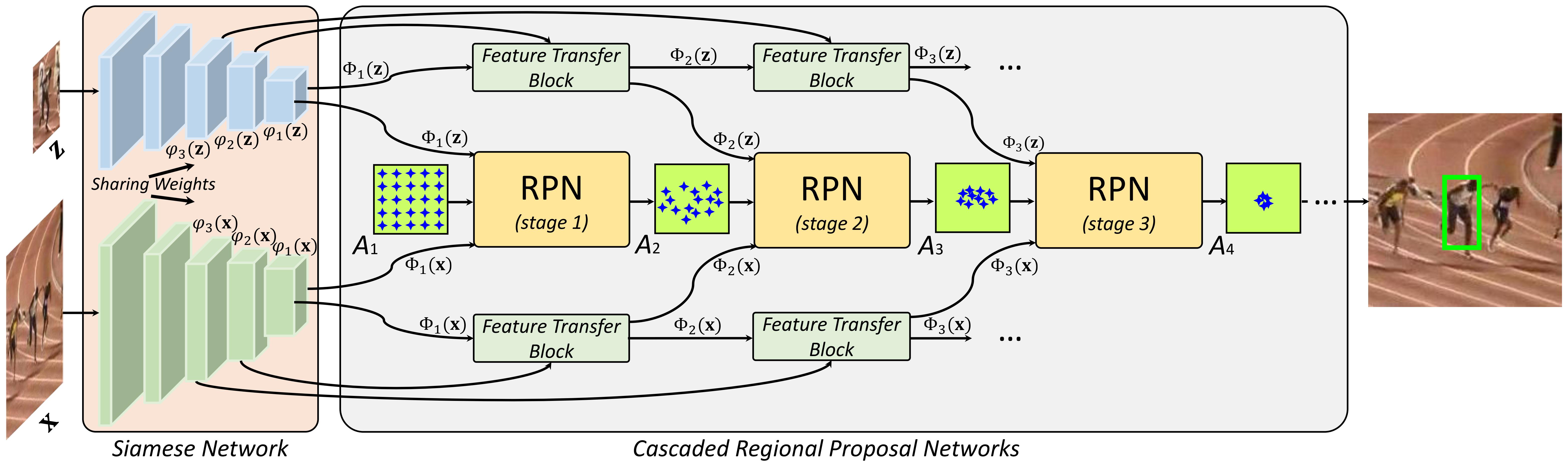

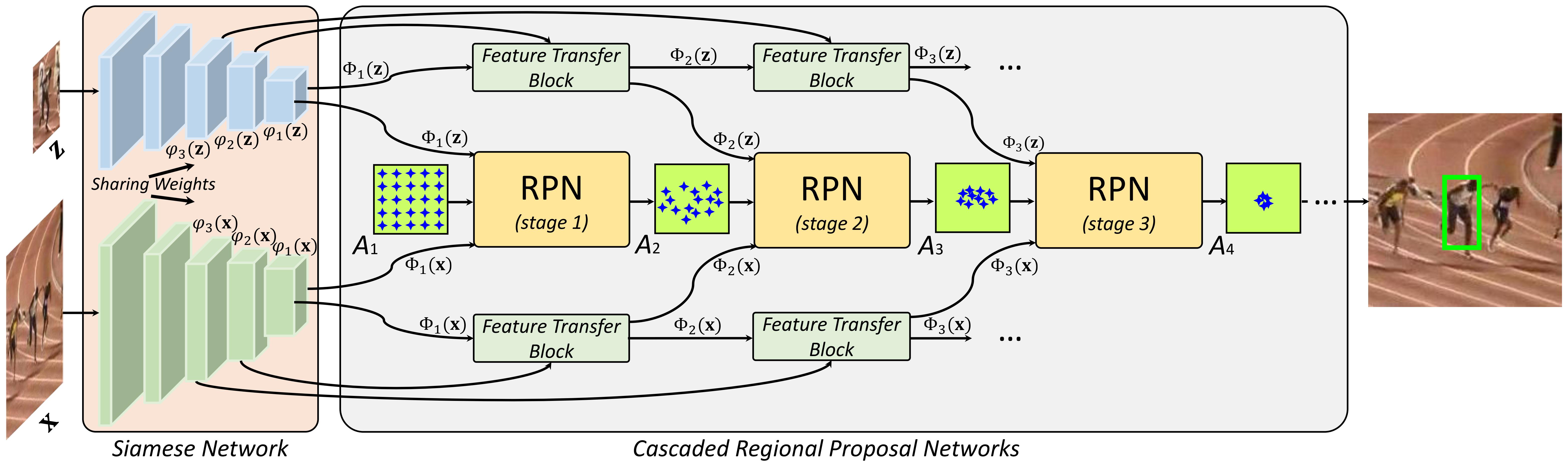

Figure 1. Illustration of the proposed C-RPN that consists of a sequence of RPNs cascading from high-level to low-level layers in the Siamese network.

Heng Fan and Haibin Ling

Department of Computer and Information Sciences, Temple University


Recently, region proposal networks (RPNs) have been combined with Siamese networks for tracking and have shown excellent accuracy with high efficiency. Nevertheless, previously proposed one-stage Siamese-RPN trackers degrade in the presence of similar distractors and large scale variation. To address these issues, we propose a multi-stage tracking framework, Siamese Cascaded RPN (C-RPN), which consists of a sequence of RPNs cascaded from deep high-level to shallow low-level layers in a Siamese network. Compared to previous solutions, C-RPN has several advantages: (1) Each RPN is trained using the outputs of the RPN in the previous stage. This process simulates hard negative sampling, resulting in more balanced training samples. Consequently, the RPNs become sequentially more discriminative in distinguishing difficult background (i.e., similar distractors). (2) Multi-level features are fully leveraged through a novel feature transfer block (FTB) for each RPN, further improving the discriminability of C-RPN using both high-level semantic and low-level spatial information. (3) With multiple steps of regression, C-RPN progressively refines the location and shape of the target in each RPN, starting from the anchor boxes adjusted in the previous stage, which makes localization more accurate. C-RPN is trained end-to-end with a multi-task loss function. At inference, C-RPN is deployed as is, without any temporal adaptation, for real-time tracking. In extensive experiments on OTB-2013, OTB-2015, VOT-2016, VOT-2017, LaSOT and TrackingNet, C-RPN consistently achieves state-of-the-art results and runs in real time.
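The interplay of points (1) and (3), where easy negatives are dropped between stages and surviving anchors are refined before being handed to the next RPN, can be sketched in a few lines. This is a minimal sketch, not the authors' implementation: it assumes the standard Faster R-CNN box parameterization (dx, dy, dw, dh) for regression, assumes an anchor is discarded as an easy negative when its background score exceeds a threshold `theta`, and uses hypothetical names (`Box`, `decode`, `cascade_stage`, `run_cascade`). Per-stage scores and offsets are passed in as plain lists; in C-RPN they would be produced by each stage's RPN on FTB-fused features.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Delta = Tuple[float, float, float, float]  # (dx, dy, dw, dh) regression offsets


@dataclass
class Box:
    cx: float  # center x
    cy: float  # center y
    w: float   # width
    h: float   # height


def decode(anchor: Box, delta: Delta) -> Box:
    """Refine an anchor with Faster R-CNN style offsets (assumed parameterization)."""
    dx, dy, dw, dh = delta
    return Box(
        cx=anchor.cx + dx * anchor.w,
        cy=anchor.cy + dy * anchor.h,
        w=anchor.w * math.exp(dw),
        h=anchor.h * math.exp(dh),
    )


def cascade_stage(anchors: Sequence[Box], bg_scores: Sequence[float],
                  deltas: Sequence[Delta], theta: float) -> List[Box]:
    """One hypothetical cascade stage.

    Anchors whose background score exceeds `theta` are treated as easy negatives
    and dropped; the survivors are refined by their predicted offsets and become
    the anchors of the next stage.
    """
    refined = []
    for anchor, bg, delta in zip(anchors, bg_scores, deltas):
        if bg > theta:
            continue  # easy background: not passed on to the next stage
        refined.append(decode(anchor, delta))
    return refined


def run_cascade(anchors: Sequence[Box],
                stage_outputs: Sequence[Tuple[Sequence[float], Sequence[Delta]]],
                theta: float) -> List[Box]:
    """Chain stages; stage k's outputs must align with the anchors surviving stage k-1."""
    current = list(anchors)
    for bg_scores, deltas in stage_outputs:
        current = cascade_stage(current, bg_scores, deltas, theta)
    return current


if __name__ == "__main__":
    anchors = [Box(50, 50, 20, 20), Box(80, 40, 30, 15), Box(10, 10, 16, 32)]
    # Made-up per-stage outputs, for illustration only.
    stage1 = ([0.2, 0.97, 0.4],
              [(0.1, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0), (0.0, -0.25, 0.0, 0.0)])
    stage2 = ([0.1, 0.99], [(0.05, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0)])
    print(run_cascade(anchors, [stage1, stage2], theta=0.95))
```

Because each stage only sees the anchors that survived the previous one, later stages concentrate on the harder, distractor-like candidates, and each regression starts from an already adjusted box rather than the original anchor.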

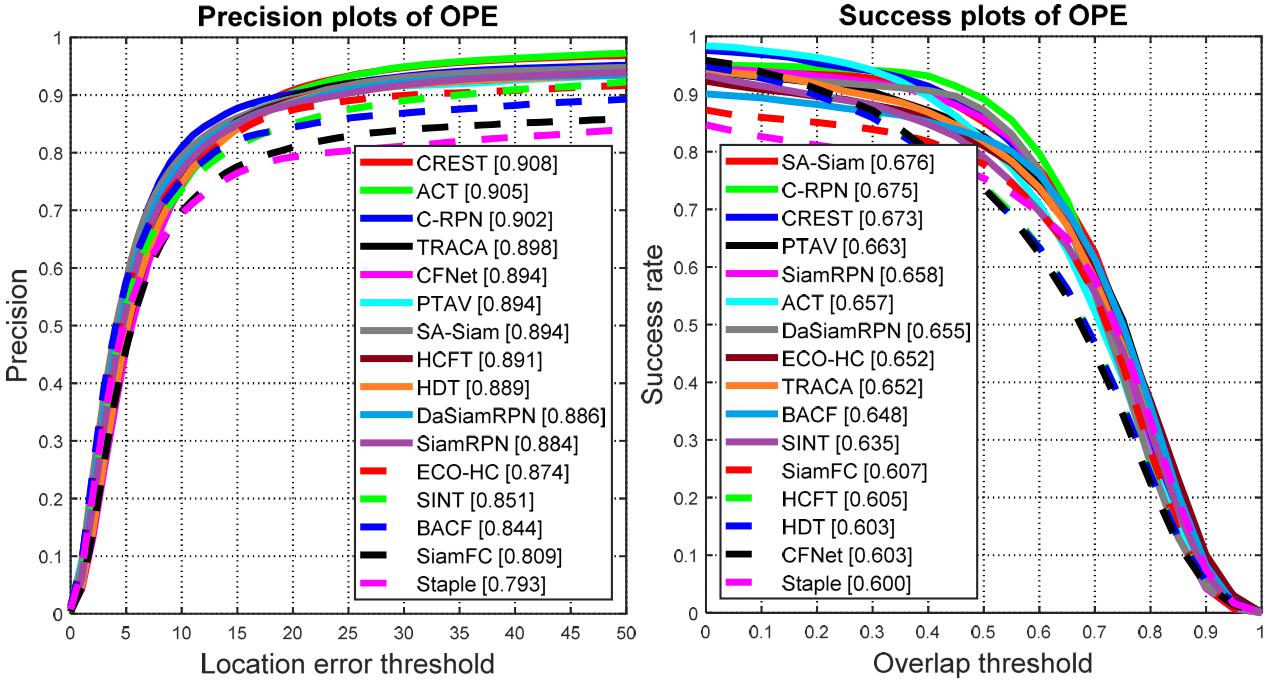

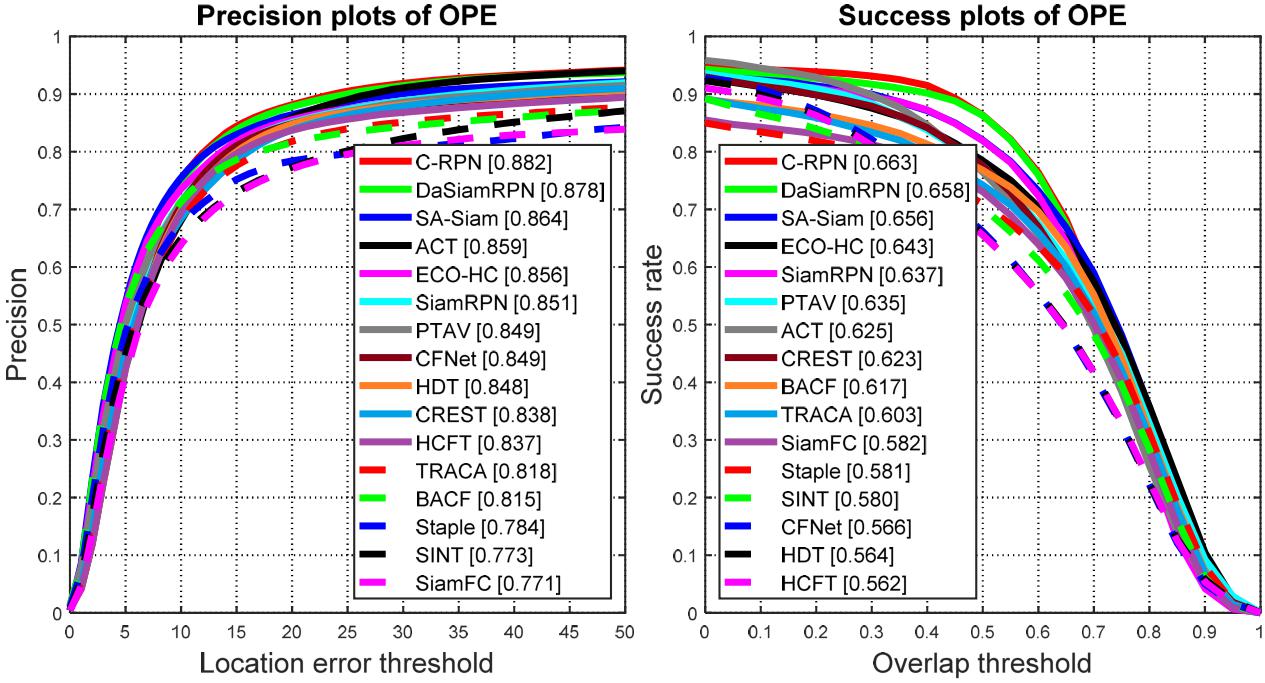

Comparison on OTB2013

Comparison on OTB2015

Figure 2. Precision plots and success plots of one-pass evaluation (OPE) for the proposed tracker and other state-of-the-art methods on OTB2013 and OTB2015.
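The two OPE curves in Figure 2 can be summarized numerically as sketched below. This sketch assumes common OTB conventions that are not stated on this page: boxes given as (x, y, w, h) with a top-left corner, precision reported at a 20-pixel center-error threshold, and success summarized by the area under the curve (AUC) over 21 evenly spaced overlap thresholds from 0 to 1. The function names are illustrative and are not taken from the official OTB toolkit.

```python
import math
from typing import Sequence, Tuple

Rect = Tuple[float, float, float, float]  # (x, y, w, h): top-left corner plus size


def iou(a: Rect, b: Rect) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a: Rect, b: Rect) -> float:
    """Euclidean distance between box centers, in pixels."""
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def precision_at(pred: Sequence[Rect], gt: Sequence[Rect], threshold: float = 20.0) -> float:
    """Fraction of frames whose center error is within `threshold` pixels."""
    errs = [center_error(p, g) for p, g in zip(pred, gt)]
    return sum(e <= threshold for e in errs) / len(errs)


def success_auc(pred: Sequence[Rect], gt: Sequence[Rect], num_thresholds: int = 21) -> float:
    """Mean success rate over overlap thresholds evenly spaced in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (num_thresholds - 1) for i in range(num_thresholds)]
    curve = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(curve) / len(curve)


if __name__ == "__main__":
    gt = [(0, 0, 10, 10)] * 3
    pred = [(0, 0, 10, 10), (5, 0, 10, 10), (100, 100, 10, 10)]
    print(precision_at(pred, gt), success_auc(pred, gt))
```

Sweeping `threshold` in `precision_at` (and plotting each point of `curve` in `success_auc`) yields the precision and success plots themselves; the single numbers above are what trackers are usually ranked by.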

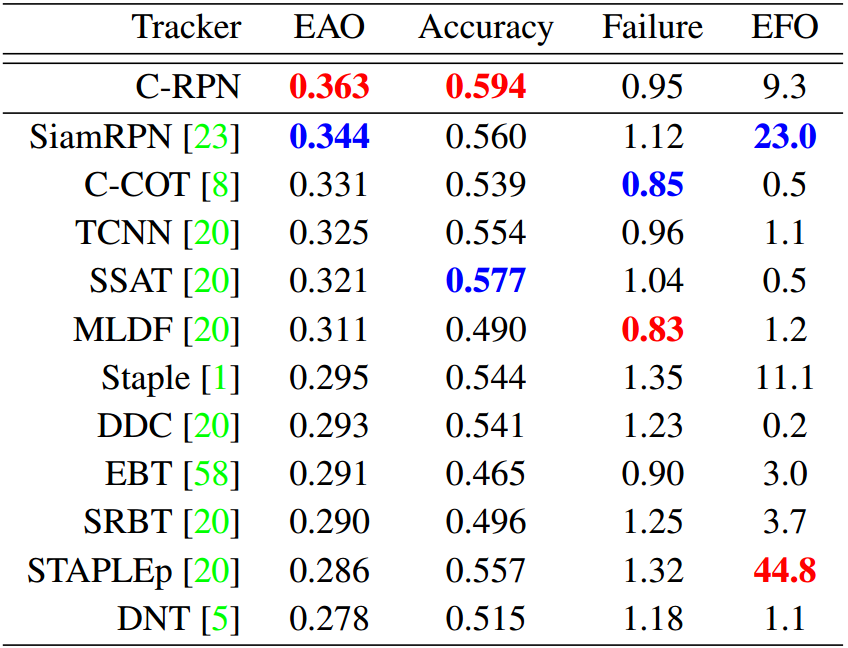

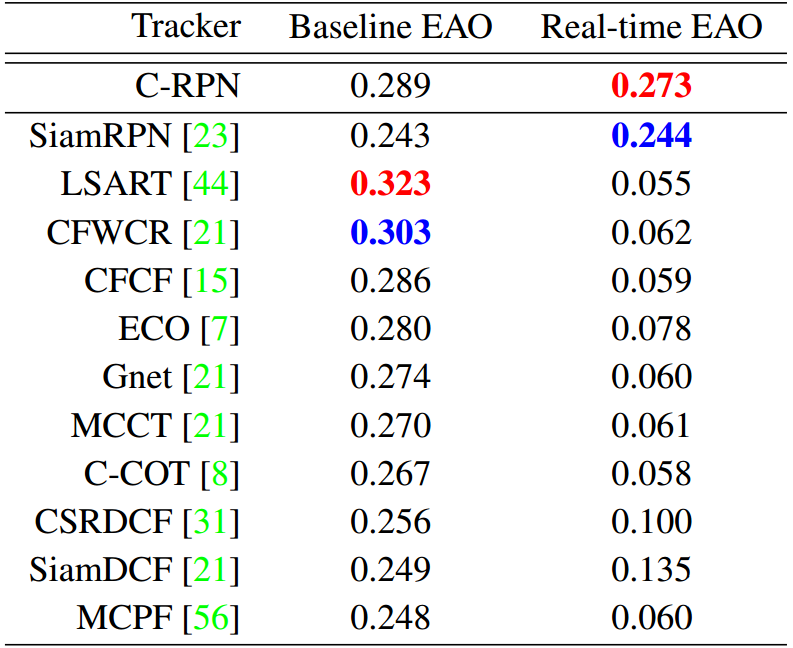

Comparison on VOT2016

Comparison on VOT2017

Figure 3. The results of the proposed tracker and other state-of-the-art methods on VOT2016 and VOT2017.

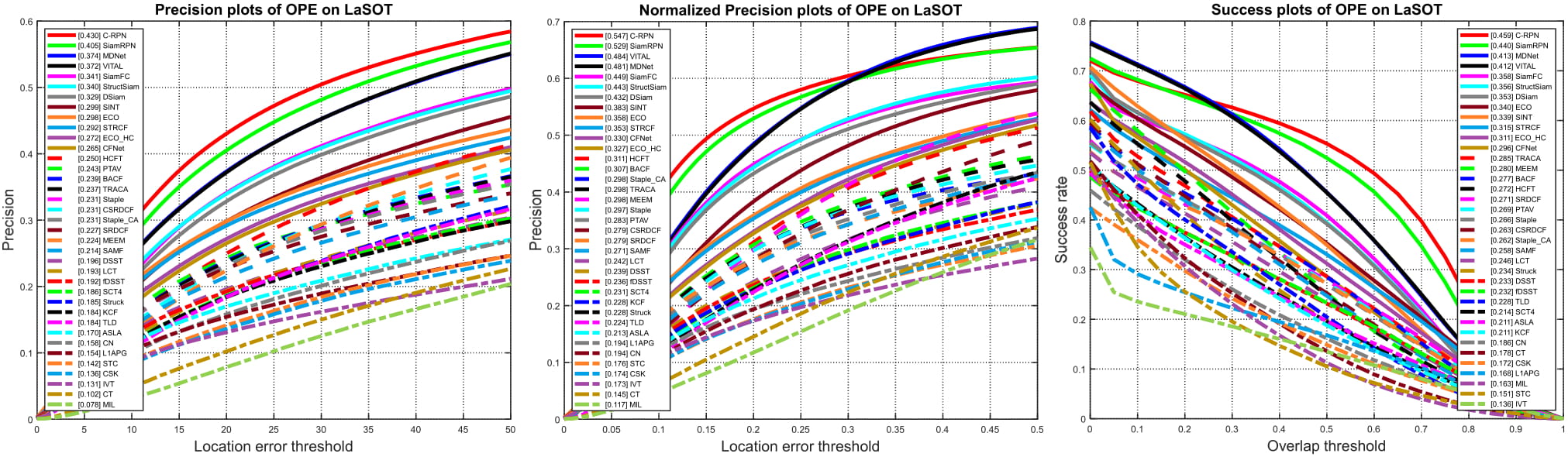

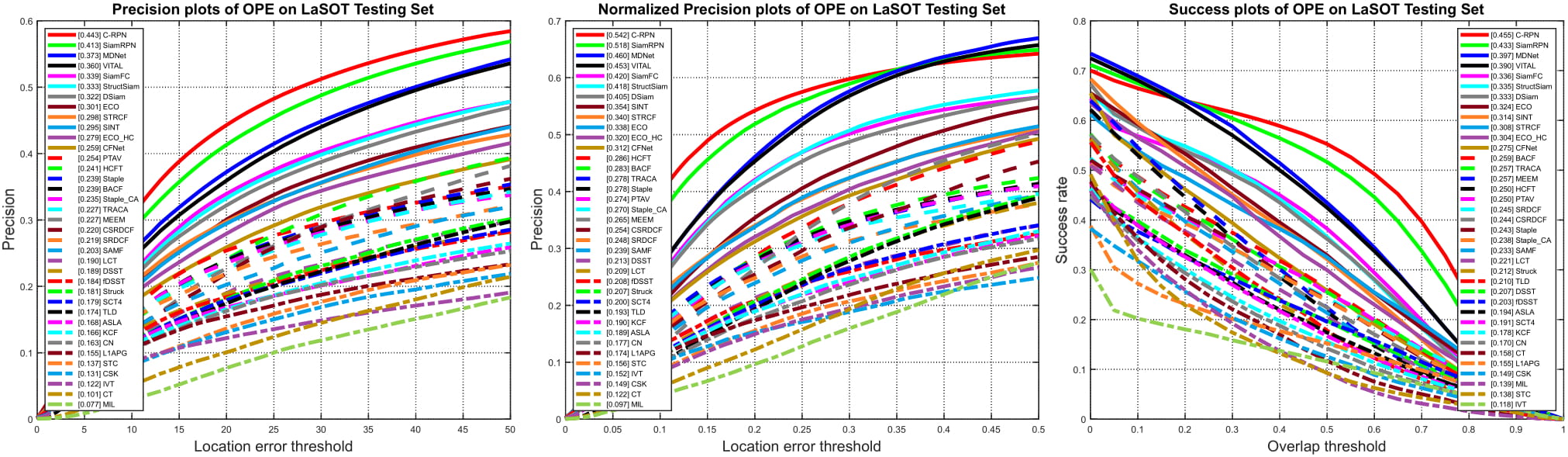

Comparison on LaSOT under protocol I

Comparison on LaSOT under protocol II

Figure 4. The results of the proposed tracker and other state-of-the-art methods on LaSOT.

Comparison with the top ten trackers on TrackingNet

Figure 5. The results of the proposed tracker and other state-of-the-art methods on TrackingNet. More results can be found on the TrackingNet leaderboard.

Heng Fan and Haibin Ling. Siamese Cascaded Region Proposal Networks for Real-Time Visual Tracking. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019. [Paper][Supplementary Material][Code][Tracking Results]


This software is free for use in research projects. If you publish results obtained using this software, please cite our paper. If you have any questions, please feel free to contact Heng Fan (hengfan AT temple.edu). NOTE: by clicking the download link above, you agree that:
