Contextual Attention for Hand Detection in the Wild

Supreeth Narasimhaswamy†,1, Zhengwei Wei†,1, Yang Wang1, Justin Zhang2, Minh Hoai1,3

†Joint First Authors, 1Stony Brook University, 2Caltech, 3VinAI Research

Abstract

We present Hand-CNN, a novel convolutional network architecture for detecting hand masks and predicting hand orientations in unconstrained images. Hand-CNN extends Mask R-CNN with a new attention mechanism that incorporates contextual cues into the detection process. This attention mechanism can be implemented as an efficient network module that captures non-local dependencies between features. The module can be inserted at different stages of an object detection network, and the entire detector can be trained end-to-end.
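To illustrate what "capturing non-local dependencies between features" means, here is a minimal NumPy sketch of a generic embedded-Gaussian non-local (self-attention) block, in which every spatial position of a feature map aggregates information from every other position. This is an assumption-laden illustration of the general technique, not the Hand-CNN contextual attention module itself; the function names and the plain-matrix projections (standing in for 1x1 convolutions) are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def non_local_block(features, w_theta, w_phi, w_g, w_out):
    """Generic embedded-Gaussian non-local block on an (H, W, C) feature map.

    Hypothetical sketch, not the Hand-CNN module.
    w_theta, w_phi, w_g: (C, C') projections (stand-ins for 1x1 convolutions).
    w_out: (C', C) projection back to C channels.
    Returns an (H, W, C) array: the input plus attention-weighted context.
    """
    h, w, c = features.shape
    x = features.reshape(h * w, c)          # flatten spatial positions: (N, C)
    theta = x @ w_theta                     # queries: (N, C')
    phi = x @ w_phi                         # keys: (N, C')
    g = x @ w_g                             # values: (N, C')
    attn = softmax(theta @ phi.T, axis=-1)  # (N, N): each position attends to all positions
    context = attn @ g                      # (N, C') aggregated non-local context
    y = x + context @ w_out                 # residual connection back to C channels
    return y.reshape(h, w, c)


# Usage: random features and weights, just to show the shapes involved.
rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 5, 8))
w_t, w_p, w_g = (rng.standard_normal((8, 4)) for _ in range(3))
w_o = rng.standard_normal((4, 8))
out = non_local_block(feats, w_t, w_p, w_g, w_o)
print(out.shape)  # (4, 5, 8)
```

The residual connection is what makes such a block easy to insert at different stages of a detector: with the output projection initialized to zero, the block starts as an identity mapping and the network can be trained end-to-end from there.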

We also introduce a large-scale annotated hand dataset containing hands in unconstrained images for training and evaluation. We show that Hand-CNN outperforms existing methods on several datasets, including our hand detection benchmark and the publicly available PASCAL VOC human layout challenge. We also conduct ablation studies on hand detection to show the effectiveness of the proposed contextual attention module.

Qualitative Results

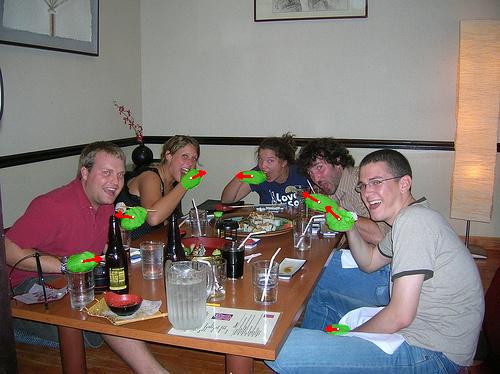

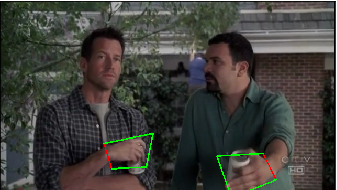

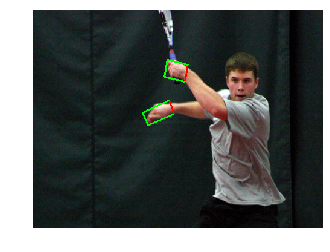

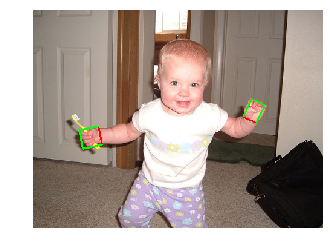

Figure 1: Some qualitative results of Hand-CNN on images.

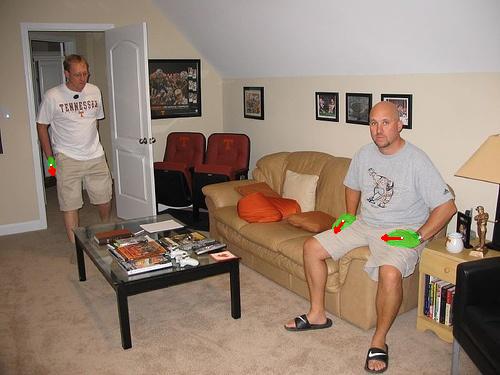

Figure 2: Some more results of Hand-CNN on images and videos.

Demo

Please find an online demo for this project at demo. The demo allows a user to upload an image and displays the corresponding output image, color-splashed with the detected hand masks. Red arrows indicating the orientation of each detected hand are also displayed.
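The page does not describe how the color splash is rendered. Assuming it follows the common Mask R-CNN demo convention (image shown in grayscale, original colors kept inside the predicted masks), a minimal NumPy sketch looks like this; `color_splash` and the `(H, W, K)` boolean mask layout are hypothetical choices for illustration, not the project's code.

```python
import numpy as np


def color_splash(image, masks):
    """Keep original colors inside detected hand masks, grayscale elsewhere.

    Hypothetical sketch of a color-splash visualization.
    image: (H, W, 3) uint8 RGB image.
    masks: (H, W, K) boolean array, one channel per detected hand.
    """
    # Luminance-weighted grayscale copy (ITU-R BT.601 weights), rounded to uint8
    # and replicated to 3 channels so it can be mixed with the color image.
    gray = image.astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    gray = np.clip(np.round(gray), 0, 255).astype(np.uint8)
    gray = np.repeat(gray[..., None], 3, axis=-1)
    if masks.shape[-1] == 0:
        # No detections: the whole image is shown in grayscale.
        return gray
    # Union of all hand masks, kept as (H, W, 1) so it broadcasts over RGB.
    union = np.any(masks, axis=-1, keepdims=True)
    return np.where(union, image, gray)


# Usage: a 2x2 red image with one "hand" pixel at the top-left corner.
img = np.zeros((2, 2, 3), dtype=np.uint8)
img[..., 0] = 200
m = np.zeros((2, 2, 1), dtype=bool)
m[0, 0, 0] = True
print(color_splash(img, m)[0, 0], color_splash(img, m)[1, 1])
```

Taking the union of all masks means overlapping hands are simply shown in color; drawing the orientation arrows would be a separate step on top of this image.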

Datasets

As part of this project, we release two datasets: TV-Hand and COCO-Hand. The TV-Hand dataset contains hand annotations for 9.5K image frames extracted from the ActionThread dataset. The COCO-Hand dataset contains annotations for 25K images from the Microsoft COCO dataset. The datasets can be downloaded at TV-Hand (2.3 GB) and COCO-Hand (1.2 GB).

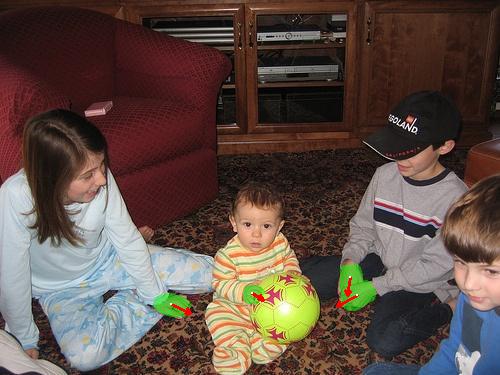

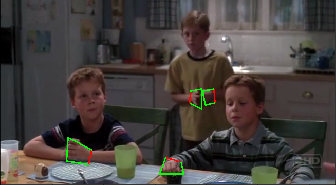

Figure 3: Some images and annotations from the TV-Hand data.

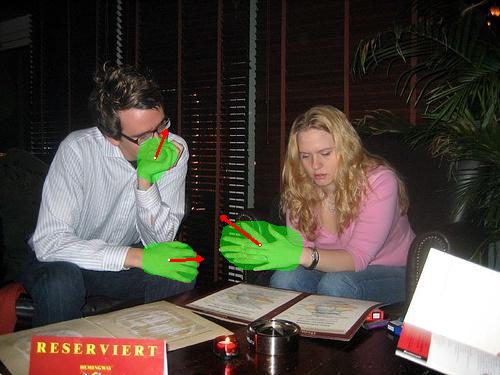

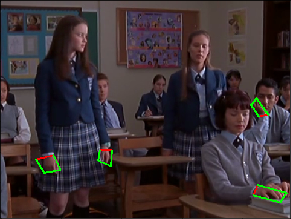

Figure 4: Some images and annotations from the COCO-Hand data.

Code

Please see the code for a Keras implementation of Hand-CNN.

Paper

Contextual Attention for Hand Detection in the Wild. S. Narasimhaswamy, Z. Wei, Y. Wang, J. Zhang, and M. Hoai, IEEE International Conference on Computer Vision, ICCV 2019.

If you find this work useful in your research, please cite it using this BibTeX.