Inverse Reinforcement Learning for Human Attention Modeling

Overview

The process by which people shift their attention from one thing to another touches upon everything that we think and do, and as such has widespread importance in fields ranging from basic research and education to applications in industry and national defense. This research develops a computational model for predicting these human shifts in visual attention. Prediction is understanding, and with this model we will achieve a greater understanding of this core human cognitive process. More tangibly, prediction enables applications to anticipate where attention will shift in response to seeing specific imagery. This in turn would usher in 1) a new generation of human-computer interactive systems, ones capable of interacting with users at the level of their attention movements, and 2) novel ways to annotate and index visual content based on attentional importance or interest.

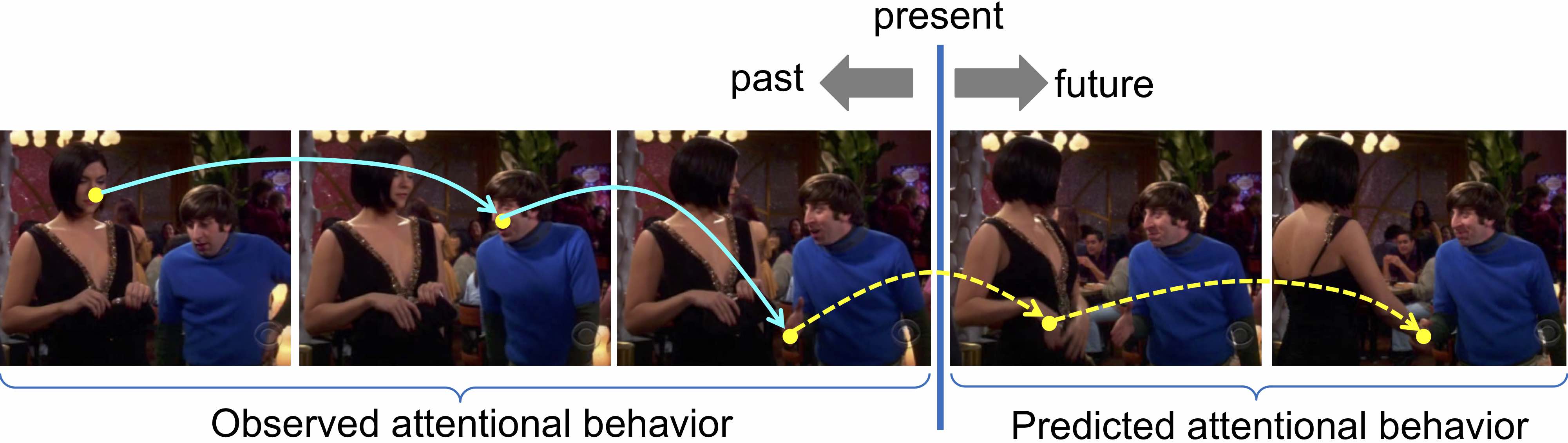

This project investigates a synergistic computational and behavioral approach for modeling the movements of human attention. The approach assumes that attentional engagement with an image (or video frame) depends on both the pixels being viewed and the viewer’s previous state. Based on this assumption, visual attention is posed as a Markov decision process, and inverse reinforcement learning is used to learn a reward function that assigns a reward to specific spatio-temporal regions of an image, namely the pixels at the viewer’s momentary locus of attention. Under this novel approach, the attention mechanism is treated as an agent whose action is to select the location in an image or video frame that will maximize its total reward. The model is being evaluated against a behavioral ground truth consisting of the eye movements that people make as they view images and video during free-viewing and visual search tasks.
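To make the formulation above concrete, the following is a minimal illustrative sketch, not the project's actual model. It assumes an image divided into a square grid of patches, a state made up of the current fixation and the set of already-visited patches, and a reward that is linear in a few hand-picked features. All function names, the feature set, and the synthetic data are hypothetical. The sketch also simplifies the learning problem to a greedy, one-step maximum-entropy-style fit (each attention shift is treated as a softmax choice over rewards), whereas a full inverse reinforcement learning treatment would reason about total reward over the whole scanpath.

```python
import numpy as np

def state_features(patch_feats, current, visited):
    """Features of every candidate location given the viewer's state.
    Assumed state: current fixation index plus a mask of visited patches."""
    n = len(patch_feats)
    side = int(round(np.sqrt(n)))              # assumes a square grid of patches
    rows, cols = np.divmod(np.arange(n), side)
    cur_row, cur_col = divmod(current, side)
    dist = np.hypot(rows - cur_row, cols - cur_col) / side  # normalised shift length
    return np.column_stack([patch_feats, dist, visited.astype(float)])

def policy(w, phi):
    """Softmax over linear rewards r = phi @ w (one-step, greedy simplification)."""
    r = phi @ w
    p = np.exp(r - r.max())
    return p / p.sum()

def transitions(scanpaths, patch_feats):
    """Yield (feature matrix, chosen location) for every observed attention shift."""
    for path in scanpaths:
        visited = np.zeros(len(patch_feats), dtype=bool)
        visited[path[0]] = True
        for cur, nxt in zip(path[:-1], path[1:]):
            yield state_features(patch_feats, cur, visited), nxt
            visited[nxt] = True

def fit_reward(scanpaths, patch_feats, lr=0.5, epochs=100):
    """Gradient ascent on the log-likelihood of the observed shifts.
    The gradient is observed features minus expected features under the policy."""
    data = list(transitions(scanpaths, patch_feats))
    w = np.zeros(data[0][0].shape[1])
    for _ in range(epochs):
        grad = sum(phi[nxt] - policy(w, phi) @ phi for phi, nxt in data)
        w += lr * grad / len(data)
    return w

def log_likelihood(w, scanpaths, patch_feats):
    """Total log-probability of the observed shifts under reward weights w."""
    return sum(np.log(policy(w, phi)[nxt])
               for phi, nxt in transitions(scanpaths, patch_feats))

def simulate(w, patch_feats, n_paths, length, rng):
    """Generate synthetic scanpaths from known weights (stand-in for eye-movement data)."""
    n = len(patch_feats)
    paths = []
    for _ in range(n_paths):
        path = [int(rng.integers(n))]
        visited = np.zeros(n, dtype=bool)
        visited[path[0]] = True
        for _ in range(length - 1):
            p = policy(w, state_features(patch_feats, path[-1], visited))
            path.append(int(rng.choice(n, p=p)))
            visited[path[-1]] = True
        paths.append(path)
    return paths

# Synthetic demonstration: a 5x5 grid with one made-up "saliency" value per patch.
rng = np.random.default_rng(0)
patch_feats = rng.random((25, 1))
w_true = np.array([3.0, -2.0, -4.0])   # hypothetical: saliency, shift length, revisit
paths = simulate(w_true, patch_feats, n_paths=30, length=6, rng=rng)
w_hat = fit_reward(paths, patch_feats)
print("recovered reward weights:", np.round(w_hat, 2))
```

In this toy setup the recovered weights can be compared with `w_true` to check that the fit picks up the direction of each effect. With real data, the synthetic scanpaths would be replaced by recorded fixation sequences, and the hand-picked features by learned image representations.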

People

Minh Hoai Nguyen, Principal Investigator

Dimitris Samaras, Co-PI

Greg Zelinsky, Co-PI

Lihan Huang, CS PhD Student

Zhibo Yang, CS PhD Student

Seoyoung Ahn, Psychology PhD Student

Yupei Chen, Psychology PhD Student

Publications

Benchmarking Gaze Prediction for Categorical Visual Search.

G. Zelinsky, Z. Yang, L. Huang, Y. Chen, S. Ahn, Z. Wei, H. Adeli, D. Samaras, M. Hoai (2019)

CVPR Workshop - Mutual Benefits of Cognitive and Computer Vision.

Paper BibTex

Funding Sources

National Science Foundation Award No. 1763981. RI: Medium: Inverse Reinforcement Learning for Human Attention Modeling

Copyright notice

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright. These works may not be reposted without the explicit permission of the copyright holder.