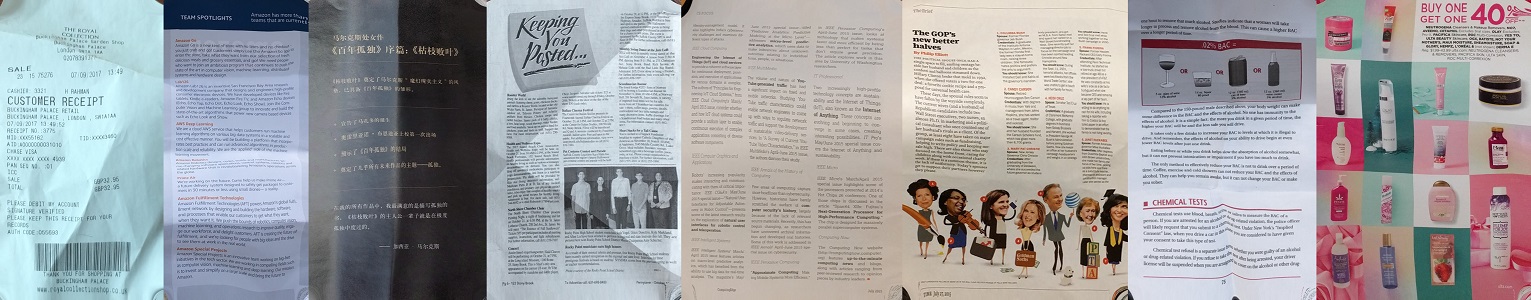

Capturing document images is a common way to digitize and record physical documents, thanks to the ubiquity of mobile cameras. To make text recognition easier, it is often desirable to digitally flatten a document image when the physical document sheet is folded or curved. In this paper, we develop the first learning-based method to achieve this goal. We propose a stacked U-Net with intermediate supervision that directly predicts the forward mapping from a distorted image to its rectified version. Because large-scale real-world data with ground-truth deformation is difficult to obtain, we create a synthetic dataset of approximately 100 thousand images by warping non-distorted document images. The network is trained on this dataset with various data augmentations to improve its generalization ability. We further create a comprehensive benchmark that covers various real-world conditions. We evaluate the proposed model quantitatively and qualitatively on this benchmark and compare it with previous non-learning-based methods.
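The abstract combines two ideas: synthetic training pairs made by warping flat document images, and a per-pixel forward mapping from the distorted image to the rectified one. The NumPy sketch below shows how a single synthetic warp can yield both the distorted image and its ground-truth map at once. It is not the paper's data-generation code: the function name `random_fold_warp`, its parameters (`alpha`, `max_shift`), and the fold-like falloff `alpha / (d + alpha)` are all hypothetical choices made for illustration.

```python
import numpy as np


def random_fold_warp(flat, alpha=0.5, max_shift=20.0, rng=None):
    """Warp a flat document image with one fold-like perturbation (hypothetical sketch).

    Returns (distorted, forward_map). forward_map[i, j] holds the (row, col)
    position in `flat` that distorted pixel (i, j) was sampled from, i.e. a
    per-pixel mapping from the distorted image back to the flat one.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = flat.shape[:2]
    rows, cols = np.meshgrid(
        np.arange(h, dtype=np.float64), np.arange(w, dtype=np.float64), indexing="ij"
    )

    # Random anchor point and random unit displacement direction (assumed choices).
    anchor = np.array([rng.uniform(0, h), rng.uniform(0, w)])
    direction = rng.normal(size=2)
    direction /= np.linalg.norm(direction)

    # Distance from each pixel to the line through `anchor` along `direction`,
    # normalized by the image size.
    normal = np.array([-direction[1], direction[0]])
    dist = np.abs((rows - anchor[0]) * normal[0] + (cols - anchor[1]) * normal[1])
    dist /= max(h, w)

    # Fold-like falloff: largest shift near the line, decaying away from it.
    weight = alpha / (dist + alpha)
    src_r = np.clip(rows + max_shift * weight * direction[0], 0, h - 1)
    src_c = np.clip(cols + max_shift * weight * direction[1], 0, w - 1)

    # Backward sampling with nearest-neighbor lookup keeps the sketch dependency-free.
    distorted = flat[np.rint(src_r).astype(int), np.rint(src_c).astype(int)]
    forward_map = np.stack([src_r, src_c], axis=-1)
    return distorted, forward_map
```

Generating the distorted image by backward sampling (`distorted(x) = flat(x + D(x))`) is what makes the label free: the sampling coordinates themselves are the distorted-to-flat mapping a network would be trained to regress.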

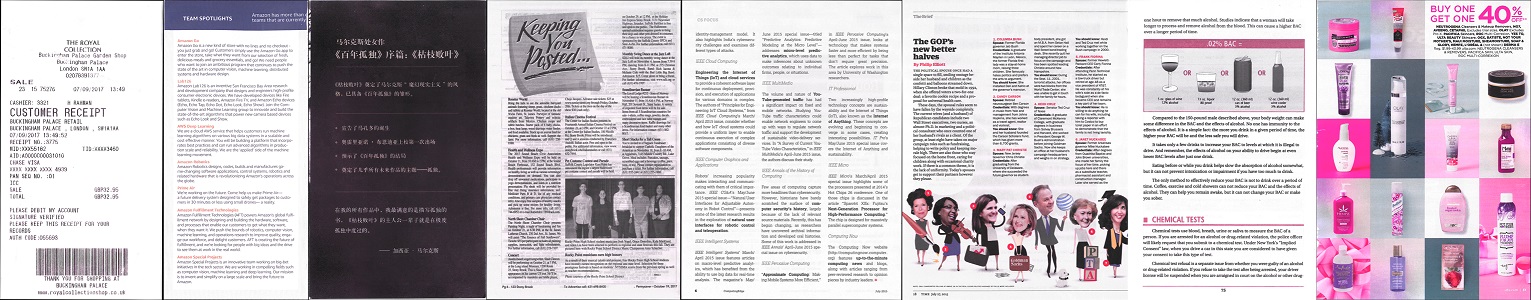

We provide the benchmark dataset. It contains three parts: i) the original photos, ii) document-centered cropped images (used in our paper), and iii) scans from a flatbed scanner.

Along with the benchmark, we also provide the evaluation code. We use two evaluation metrics in our experiments: Multi-Scale Structural Similarity (MS-SSIM) and Local Distortion (LD).

If you use the dataset or code, please cite:

DocUNet: Document Image Unwarping via A Stacked U-Net. Ke Ma, Zhixin Shu, Xue Bai, Jue Wang, Dimitris Samaras. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. [BibTeX]

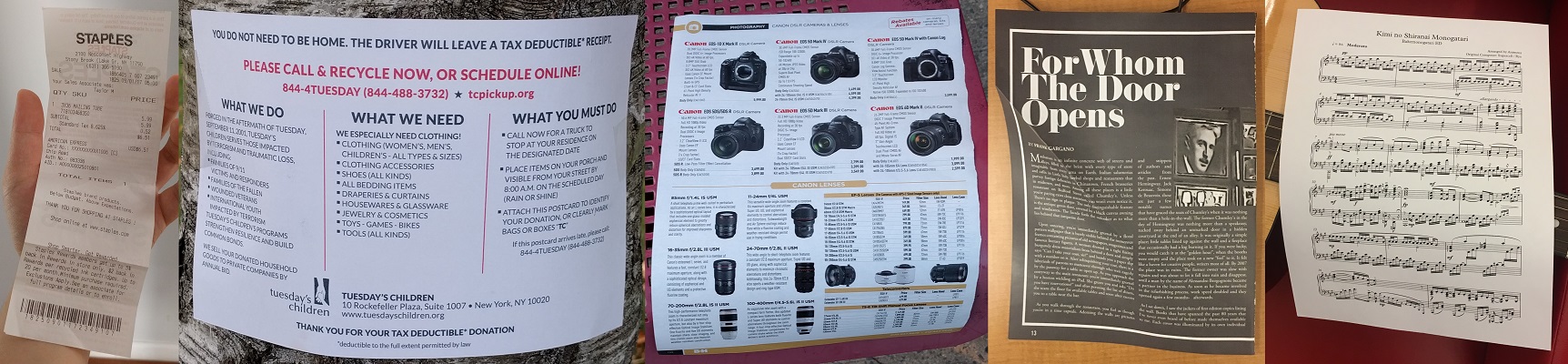

The evaluation code computes both MS-SSIM and Local Distortion (LD). The SSIM computation uses the MATLAB Image Processing Toolbox. The weights for the multiple scales are taken from:

[1] Wang, Zhou, Eero P. Simoncelli, and Alan C. Bovik. "Multiscale structural similarity for image quality assessment." In Asilomar Conference on Signals, Systems and Computers, 2003.
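To illustrate how the scale weights from [1] enter the score, here is a minimal NumPy sketch of MS-SSIM: the contrast-structure term `cs_j` is computed at every scale, the luminance term only at the coarsest scale `M`, and the result is `l_M^{w_M} * prod_j cs_j^{w_j}`. This is not the released MATLAB evaluation code. The 11 x 11 Gaussian window with sigma 1.5, the constants K1 = 0.01 and K2 = 0.03, 2 x 2 average-pool downsampling, and clipping negative `cs` values to zero are assumed defaults from the SSIM literature, so scores may differ slightly from the official implementation. Inputs are assumed to be grayscale arrays at least 176 pixels per side, so the window still fits at the fifth scale.

```python
import numpy as np

# Per-scale weights from Wang, Simoncelli & Bovik (2003), reference [1].
MS_SSIM_WEIGHTS = np.array([0.0448, 0.2856, 0.3001, 0.2363, 0.1333])


def _gaussian_kernel(size=11, sigma=1.5):
    x = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def _filter(img, k):
    # Separable 2-D Gaussian filtering, keeping only the 'valid' region.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def _ssim_terms(x, y, data_range):
    """Return the mean luminance term and mean contrast-structure term."""
    k = _gaussian_kernel()
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = _filter(x, k), _filter(y, k)
    sxx = _filter(x * x, k) - mu_x ** 2
    syy = _filter(y * y, k) - mu_y ** 2
    sxy = _filter(x * y, k) - mu_x * mu_y
    lum = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    cs = (2 * sxy + c2) / (sxx + syy + c2)
    return lum.mean(), cs.mean()


def _downsample(img):
    # 2x2 average pooling: low-pass filter and subsample by a factor of 2.
    h, w = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def ms_ssim(x, y, data_range=1.0, weights=MS_SSIM_WEIGHTS):
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    cs_values = []
    for j in range(len(weights)):
        lum, cs = _ssim_terms(x, y, data_range)
        # Clip negative cs so fractional powers stay real (an assumed convention).
        cs_values.append(max(cs, 0.0))
        if j < len(weights) - 1:
            x, y = _downsample(x), _downsample(y)
    cs_values = np.array(cs_values)
    # Luminance only at the coarsest scale; contrast-structure at every scale.
    return float(lum ** weights[-1] * np.prod(cs_values ** weights))
```

For two identical 256 x 256 grayscale images in [0, 1], `ms_ssim(a, a)` returns 1.0, and adding noise to one of them lowers the score.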

LD relies on the method from:

[2] Liu, Ce, Jenny Yuen, and Antonio Torralba. "SIFT Flow: Dense correspondence across scenes and its applications." In PAMI, 2010.

and its implementation (included in the evaluation code package):

Link
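Once a dense correspondence field between a rectified image and its flatbed scan is available (for example from SIFT Flow [2]), LD reduces to a simple statistic over that field. The sketch below assumes LD is the mean per-pixel displacement magnitude in pixels; the function name `local_distortion` is hypothetical, the flow computation itself is not shown, and the released MATLAB code remains the authoritative definition.

```python
import numpy as np


def local_distortion(vx, vy):
    """Mean L2 magnitude of a dense displacement field (assumed LD definition).

    vx, vy: per-pixel horizontal and vertical displacements (in pixels) that
    match each pixel of the rectified image to the flatbed scan, e.g. as
    produced by a dense-correspondence method such as SIFT Flow.
    """
    vx = np.asarray(vx, dtype=np.float64)
    vy = np.asarray(vy, dtype=np.float64)
    if vx.shape != vy.shape:
        raise ValueError("vx and vy must have the same shape")
    # Euclidean length of each displacement vector, averaged over all pixels.
    return float(np.mean(np.hypot(vx, vy)))
```

For instance, a uniform field with `vx = 3` and `vy = 4` everywhere gives an LD of 5.0, while a perfectly aligned result (zero flow) gives 0.0; lower is better.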

This work started when Ke Ma was an intern at Megvii Inc. This work was supported by a gift from Adobe, the Partner University Fund, and the SUNY2020 Infrastructure Transportation Security Center.

If you have any questions, please send an email to kemmaATcsDOTstonybrookDOTedu.