This work bridges computational fairness, causal interpretability, and Responsible AI communication. We create interactive tools that let users visualize, question, and reconfigure both data-driven models and the social narratives they inform. Because bias and fairness are inherently subjective and mean different things to different people, the systems developed in this research feature interactive visual interfaces that put the human in the loop. These interfaces empower users to take an active role in understanding and mitigating the bias, misinformation, and misconceptions that arise in algorithmic decisions, causal reasoning, visual communication, and public discourse on polarizing issues such as climate change, health, and social policy. Increasingly, these systems also leverage large language models to simulate diverse personas, enabling the study of how reasoning, messaging, and belief formation differ across social, psychological, and cultural contexts.


Leveraging Large Language Models for Personalized Public Messaging

A. Kumar Das, C. Xiong Bearfield, K. Mueller

ACM CHI Conference on Human Factors in Computing Systems Late Breaking Work

Tokyo, Japan, May 2025


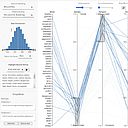

FairPlay: A Collaborative Approach to Mitigate Bias in Datasets for Improved AI Fairness

T. Behzad, M. Singh, A. Ripa, K. Mueller

Proceedings of the ACM on Human-Computer Interaction (CSCW)

9(2):1-30, 2025


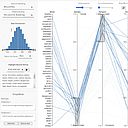

Belief Miner: A Methodology for Discovering Causal Beliefs and Causal Illusions from General Populations

S. Salim, N. Hoque, K. Mueller

Proceedings of the ACM on Human-Computer Interaction (CSCW)

8(CSCW1):1-37, 2024


D-BIAS: A Causality-Based Human-in-the-Loop System for Tackling Algorithmic Bias

B. Ghai, K. Mueller

IEEE Trans. on Visualization and Computer Graphics (Special Issue IEEE VIS 2022)

29(1):473-482, 2023


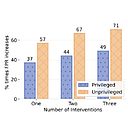

Cascaded Debiasing: Studying the Cumulative Effect of Multiple Fairness-Enhancing Interventions

B. Ghai, M. Mishra, K. Mueller

ACM Conference on Information and Knowledge Management (CIKM)

Atlanta, GA, October 2022


WordBias: An Interactive Visual Tool for Discovering Intersectional Biases Encoded in Word Embeddings

B. Ghai, Md. N. Hoque, K. Mueller

ACM CHI Late Breaking Work

Virtual, May 8-13, 2021


Measuring Social Biases of Crowd Workers using Counterfactual Queries

B. Ghai, Q. V. Liao, Y. Zhang, K. Mueller

Fair & Responsible AI Workshop (co-located with CHI 2020)

Virtual, April 2020


Toward Interactively Balancing the Screen Time of Actors Based on Observable Phenotypic Traits in Live Telecast

Md. N. Hoque, S. Billah, N. Saquib, K. Mueller

ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW)

Virtual, October 17-21, 2020
