Object Class Recognition and Human Vision Guided Search

We investigate methods for object recognition, with an emphasis on object class recognition. Using heterogeneous features with AdaBoost learning, we achieved state-of-the-art performance on object class recognition. We also combined this recognition framework with a biologically inspired eye model to predict sequences of eye movements that simulate human eye movements in visual search tasks. In our experiments, the model's behavior matched human behavioral data collected with an eye tracker surprisingly well. We then extended the model to categorical search, the task of finding and recognizing categorically defined targets, and showed that visual features discriminating a target category from non-targets can be learned and used to guide eye movements during categorical search.

- Selected publications:

Modelling eye movements in a categorical search task, Gregory J. Zelinsky, Hossein Adeli, Yifan Peng, Dimitris Samaras. Philosophical Transactions of the Royal Society B: Biological Sciences, 368, 20130058, September 2013.

Modeling Guidance and Recognition in Categorical Search: Bridging Human and Computer Object Detection, Gregory Zelinsky, Yifan Peng, Alexander Berg, Dimitris Samaras. Vision Sciences Society, 2012.

- Old publications:

A Computational Model of Eye Movements During Object Class Detection, Wei Zhang, Hyejin Yang, Dimitris Samaras, Greg Zelinsky. In Advances in Neural Information Processing Systems (NIPS) 18, 2005, Vancouver, Canada, pp. 1609-1616.

The Role of Top-down and Bottom-up Processes in Guiding Eye Movements During Visual Search, Greg Zelinsky, Wei Zhang, Bing Yu, Xin Chen, Dimitris Samaras. In Advances in Neural Information Processing Systems (NIPS) 2005, Vancouver, Canada, pp. 1569-1576.

Object class recognition using multiple layer boosting with multiple features, Wei Zhang, Bing Yu, Greg Zelinsky, Dimitris Samaras. In CVPR, pp II:323-330, 2005.

- Collaborators: Gregory Zelinsky (Stony Brook)

- Former students: Yifan Peng, Wei Zhang, Bing Yu

- Funding: NIMH, ARO

- Keywords: Object Recognition, AdaBoost, Human Vision, Computational Eye Model

Modelling eye movements in a categorical search task

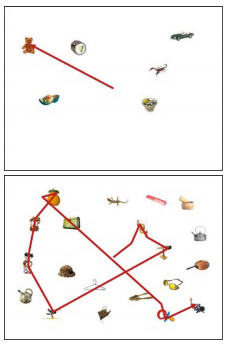

We introduce a model of eye movements during categorical search, the task of finding and recognizing categorically defined targets. It extends a previous model of eye movements during search (target acquisition model, TAM) by using distances from a support vector machine (SVM) classification boundary to create probability maps indicating pixel-by-pixel evidence for the target category in search images. Other additions include functionality enabling target-absent searches, and a fixation-based blurring of the search images that is now based on a mapping between visual and collicular space. We tested this model on images from a previously conducted variable set-size (6/13/20) present/absent search experiment in which participants searched for categorically defined teddy bear targets among random category distractors. The model not only captured target-present/absent set-size effects, but also accurately predicted, for all conditions, the numbers of fixations made prior to search judgements. It also predicted the percentages of first eye movements during search landing on targets, a conservative measure of search guidance. Effects of set size on false negative and false positive errors were also captured, but error rates in general were overestimated. We conclude that visual features discriminating a target category from non-targets can be learned and used to guide eye movements during categorical search.
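The step from SVM distances to a probability map can be illustrated with a small sketch. It assumes a logistic squashing of the signed distance, with a hypothetical `slope` parameter; the abstract does not say which mapping the model actually uses, and the toy distance grid below is invented for illustration.

```python
import math

def logistic(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def distances_to_probability_map(distances, slope=1.0):
    """Map signed SVM distances to values in (0, 1), one per pixel.

    Assumption: a logistic mapping with a free `slope` parameter.
    Positive distances (target side of the boundary) map above 0.5.
    """
    return [[logistic(slope * d) for d in row] for row in distances]

def peak_location(prob_map):
    """Return the (row, col) with the strongest target evidence."""
    best_value, best_pos = -1.0, None
    for r, row in enumerate(prob_map):
        for c, value in enumerate(row):
            if value > best_value:
                best_value, best_pos = value, (r, c)
    return best_pos

# Hypothetical 3x3 grid of signed distances from the SVM boundary.
distances = [
    [-2.0, -1.0, -0.5],
    [-1.5, 0.0, 2.5],
    [-3.0, 0.5, 1.0],
]
prob_map = distances_to_probability_map(distances)
target_guess = peak_location(prob_map)
```

Here `target_guess` is the pixel with the largest distance on the target side of the boundary, which is the kind of location a guidance map would favor for the next eye movement.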

Publications

Modelling eye movements in a categorical search task, Gregory J. Zelinsky, Hossein Adeli, Yifan Peng, Dimitris Samaras. Philosophical Transactions of the Royal Society B: Biological Sciences, 368, 20130058, September 2013.

Modeling Guidance and Recognition in Categorical Search: Bridging Human and Computer Object Detection, Gregory Zelinsky, Yifan Peng, Alexander Berg, Dimitris Samaras. Vision Sciences Society, 2012.

A Computational Model of Eye Movements During Object Class Detection

We present a computational model of human eye movements in an object class detection task. The model combines state-of-the-art computer vision object class detection methods (SIFT features trained using AdaBoost) with a biologically plausible model of human eye movement to produce a sequence of simulated fixations, culminating with the acquisition of a target. We validated the model by comparing its behavior to the behavior of human observers performing the identical object class detection task (looking for a teddy bear among visually complex nontarget objects). We found considerable agreement between the model and human data in multiple eye movement measures, including number of fixations, cumulative probability of fixating the target, and scanpath distance.
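Two of the eye movement measures named above can be computed from fixation data as in the following sketch. It assumes that scanpath distance means the summed Euclidean distance between successive fixations and that the cumulative probability is taken across trials; the function names and the example data are hypothetical, not taken from the paper.

```python
import math

def scanpath_distance(fixations):
    """Sum of Euclidean distances between successive (x, y) fixations."""
    return sum(math.dist(a, b) for a, b in zip(fixations, fixations[1:]))

def cumulative_target_probability(first_target_fixation, max_fixations):
    """Fraction of trials in which the target was fixated by fixation k.

    `first_target_fixation` holds, per trial, the 1-based index of the
    first fixation on the target, or None if the target was never fixated.
    """
    n_trials = len(first_target_fixation)
    curve = []
    for k in range(1, max_fixations + 1):
        hits = sum(1 for idx in first_target_fixation
                   if idx is not None and idx <= k)
        curve.append(hits / n_trials)
    return curve

# Hypothetical single-trial scanpath in pixel coordinates.
path = [(0, 0), (3, 4), (3, 10)]
distance = scanpath_distance(path)
n_fixations = len(path)

# Hypothetical results from four trials.
curve = cumulative_target_probability([1, 2, None, 2], max_fixations=3)
```

The same functions can be applied to model-generated and human fixation sequences, so the two can be compared measure by measure.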

Publications

A Computational Model of Eye Movements During Object Class Detection, Wei Zhang, Hyejin Yang, Dimitris Samaras, Greg Zelinsky. In Advances in Neural Information Processing Systems (NIPS) 18, 2005, Vancouver, Canada, pp. 1609-1616.

The Role of Top-down and Bottom-up Processes in Guiding Eye Movements During Visual Search

To investigate the weighting of top-down (TD) and bottom-up (BU) information in guiding human search behavior, we manipulated the proportions of BU and TD components in a saliency-based model. The model is biologically plausible and implements an artificial retina and a neuronal population code. The BU component is based on feature contrast. The TD component is defined by a feature-template match to a stored target representation. We compared the model's behavior at different mixtures of TD and BU components to the eye movement behavior of human observers performing the identical search task. We found that a purely TD model provides a much closer match to human behavior than any mixture model using BU information. Only when biological constraints were removed (e.g., eliminating the retina) did a BU/TD mixture model begin to approximate human behavior.
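The idea of varying the TD/BU proportions can be sketched as a weighted blend of two maps. The convex combination, the `td_weight` parameter, and the toy 2x2 maps are assumptions for illustration; the abstract does not give the exact mixing rule, and both maps are assumed to be normalized to the same range.

```python
def mix_maps(td_map, bu_map, td_weight):
    """Blend a top-down and a bottom-up map with a convex combination.

    td_weight = 1.0 gives a purely TD map; td_weight = 0.0 a purely BU map.
    """
    if not 0.0 <= td_weight <= 1.0:
        raise ValueError("td_weight must lie in [0, 1]")
    return [
        [td_weight * t + (1.0 - td_weight) * b for t, b in zip(td_row, bu_row)]
        for td_row, bu_row in zip(td_map, bu_map)
    ]

def peak_location(saliency):
    """Return the (row, col) of the maximum of a 2D map."""
    best_value, best_pos = float("-inf"), None
    for r, row in enumerate(saliency):
        for c, value in enumerate(row):
            if value > best_value:
                best_value, best_pos = value, (r, c)
    return best_pos

# Hypothetical maps: the target-template match peaks at (0, 1),
# while feature contrast peaks at a salient distractor at (0, 0).
td_map = [[0.1, 0.9], [0.2, 0.3]]
bu_map = [[0.8, 0.2], [0.1, 0.4]]

pure_td_peak = peak_location(mix_maps(td_map, bu_map, 1.0))
pure_bu_peak = peak_location(mix_maps(td_map, bu_map, 0.0))
mixed_peak = peak_location(mix_maps(td_map, bu_map, 0.5))
```

Sweeping `td_weight` between 0 and 1 and comparing the resulting peaks against human fixations is one simple way to probe how much each component contributes.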

Publications

The Role of Top-down and Bottom-up Processes in Guiding Eye Movements During Visual Search, Greg Zelinsky, Wei Zhang, Bing Yu, Xin Chen, Dimitris Samaras. In Advances in Neural Information Processing Systems (NIPS) 2005, Vancouver, Canada, pp. 1569-1576.

Object class recognition using multiple layer boosting with multiple features

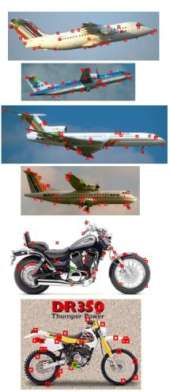

We combine local texture features (PCA-SIFT), global features (shape context), and spatial features within a single multi-layer AdaBoost model of object class recognition. The first layer selects PCA-SIFT and shape context features and combines the two feature types to form a strong classifier. Although previous approaches have used either feature type to train an AdaBoost model, our approach is the first to combine these complementary sources of information into a single feature pool and to use AdaBoost to select the features most important for class recognition. The second layer adds to these local and global descriptions information about the spatial relationships between features. Through comparisons to the training sample, we first find the most prominent local features in Layer 1, then capture the spatial relationships between these features in Layer 2. Rather than discarding this spatial information, we use it to improve the strength of our classifier. We compared our method to [4, 12, 13], and in all cases our approach outperformed these previous methods on a popular benchmark for object class recognition [4]. ROC equal error rates approached 99%. We also tested our method on a dataset of images that better equates the complexity of object and non-object images, and again found that our approach outperforms previous methods.
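The Layer 1 idea of pooling two feature types and letting AdaBoost pick the most useful ones can be sketched with discrete AdaBoost over decision stumps. This is a generic textbook variant, not the paper's implementation: the stump weak learner, the toy feature values, and the split of columns into "PCA-SIFT-like" and "shape-context-like" responses are all assumptions, and Layer 2 (spatial relationships) is not modeled.

```python
import math

def stump_predict(sample, feature, threshold, polarity):
    """Decision stump: +polarity if the feature reaches the threshold."""
    return polarity if sample[feature] >= threshold else -polarity

def train_adaboost(samples, labels, rounds):
    """Discrete AdaBoost; returns a list of (alpha, feature, threshold, polarity)."""
    n = len(samples)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        best = None
        # Exhaustively search the pooled features for the lowest weighted error.
        for feature in range(len(samples[0])):
            for threshold in sorted({s[feature] for s in samples}):
                for polarity in (1, -1):
                    error = sum(
                        w for w, s, y in zip(weights, samples, labels)
                        if stump_predict(s, feature, threshold, polarity) != y
                    )
                    if best is None or error < best[0]:
                        best = (error, feature, threshold, polarity)
        error, feature, threshold, polarity = best
        error = min(max(error, 1e-10), 1.0 - 1e-10)  # avoid log(0)
        alpha = 0.5 * math.log((1.0 - error) / error)
        ensemble.append((alpha, feature, threshold, polarity))
        # Up-weight misclassified samples, then renormalize.
        weights = [
            w * math.exp(-alpha * y * stump_predict(s, feature, threshold, polarity))
            for w, s, y in zip(weights, samples, labels)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def classify(ensemble, sample):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * stump_predict(sample, f, t, p) for a, f, t, p in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical pooled vectors: columns 0-1 stand in for PCA-SIFT responses,
# columns 2-3 for shape context responses. Labels: +1 object, -1 non-object.
samples = [
    [0.2, 0.9, 0.8, 0.1], [0.7, 0.1, 0.9, 0.5], [0.4, 0.5, 0.7, 0.9],
    [0.3, 0.8, 0.2, 0.2], [0.6, 0.2, 0.1, 0.6], [0.5, 0.4, 0.3, 0.8],
]
labels = [1, 1, 1, -1, -1, -1]

ensemble = train_adaboost(samples, labels, rounds=3)
selected_features = [feature for _, feature, _, _ in ensemble]
predictions = [classify(ensemble, s) for s in samples]
```

In this toy pool only column 2 separates the classes, so boosting selects it regardless of which feature type it came from; that is the sense in which a single pool lets the learner choose among complementary descriptors.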

Publications

Object class recognition using multiple layer boosting with multiple features, Wei Zhang, Bing Yu, Greg Zelinsky, Dimitris Samaras. In CVPR, pp II:323-330, 2005.
