Neural Face Editing with Intrinsic Image Disentangling


Abstract

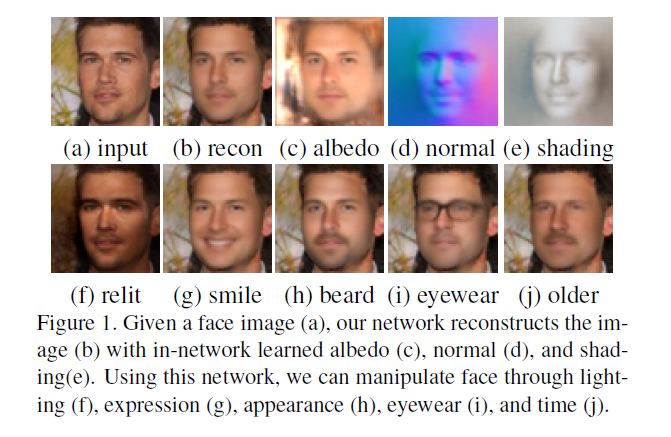

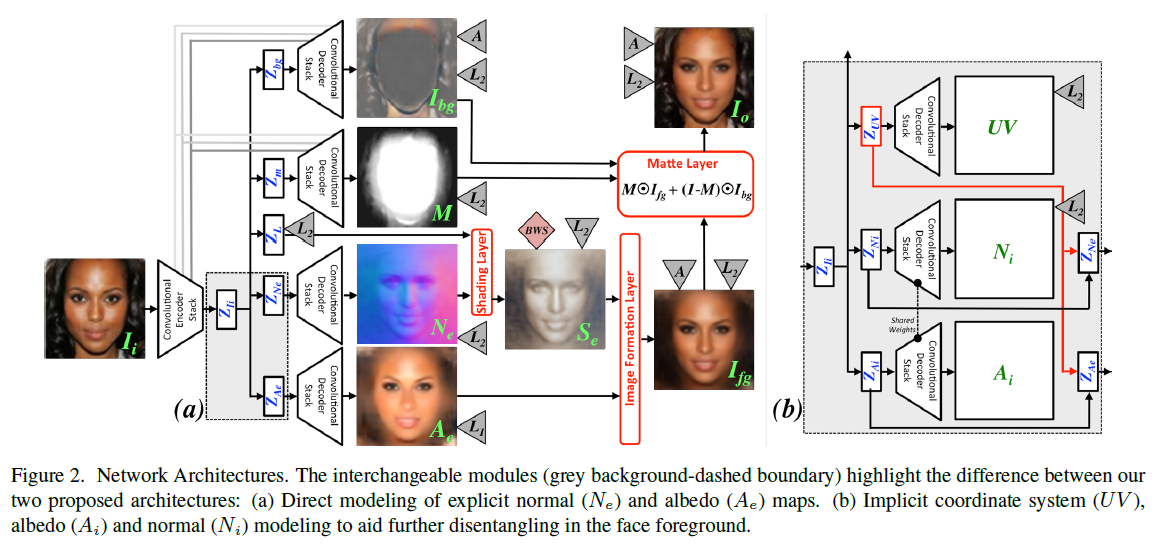

Traditional face editing methods often require a number of sophisticated, task-specific algorithms to be applied one after the other, a process that is tedious, fragile, and computationally intensive. In this paper, we propose an end-to-end generative network that infers a face-specific disentangled representation of intrinsic face properties, including shape (i.e., normals), albedo, and lighting, as well as an alpha matte. We show that this network can be trained on “in-the-wild” images by incorporating an in-network physically-based image formation module and appropriate loss functions. Our disentangled latent representation allows for semantically relevant edits, where one aspect of facial appearance can be manipulated while keeping orthogonal properties fixed, and we demonstrate its use for a number of facial editing applications.
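As an illustration of what a physically-based image formation step over normals, albedo, lighting, and an alpha matte could look like, here is a minimal NumPy sketch. It assumes Lambertian shading under second-order spherical-harmonics lighting (9 coefficients per color channel, with normalization constants folded into the coefficients) and standard alpha compositing over a background. The function names `sh_basis` and `render_face`, the array shapes, and the basis ordering are hypothetical choices for this example and are not taken from the authors' code.

```python
import numpy as np


def sh_basis(normals):
    """Evaluate 9 unnormalized second-order spherical-harmonics basis terms.

    normals: (H, W, 3) array of unit surface normals.
    Returns an (H, W, 9) array. Normalization constants are assumed to be
    absorbed into the lighting coefficients (an assumption of this sketch).
    """
    x, y, z = normals[..., 0], normals[..., 1], normals[..., 2]
    return np.stack(
        [
            np.ones_like(x),          # constant (ambient) term
            x, y, z,                  # first-order terms
            x * y, x * z, y * z,      # second-order cross terms
            x**2 - y**2,
            3 * z**2 - 1,
        ],
        axis=-1,
    )


def render_face(normals, albedo, lighting, matte, background):
    """Compose an image from intrinsic components.

    normals:    (H, W, 3) unit normals (shape)
    albedo:     (H, W, 3) reflectance
    lighting:   (9, 3) spherical-harmonics coefficients per color channel
    matte:      (H, W) alpha values in [0, 1]
    background: (H, W, 3) background image
    """
    # Shading is a linear function of the basis: (H, W, 9) @ (9, 3) -> (H, W, 3)
    shading = sh_basis(normals) @ lighting
    # Lambertian foreground: per-pixel product of albedo and shading
    foreground = albedo * shading
    # Alpha-composite the face foreground over the background
    alpha = matte[..., None]
    return alpha * foreground + (1.0 - alpha) * background
```

Because every step is differentiable, a module of this kind can sit inside a network and pass reconstruction gradients back to each component; editing one input (for example, swapping `lighting`) while holding the others fixed changes only that aspect of the rendered face.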

Network Architecture


Paper

Neural Face Editing with Intrinsic Image Disentangling. Zhixin Shu, Ersin Yumer, Sunil Hadap, Kalyan Sunkavalli, Eli Shechtman, Dimitris Samaras, IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.


Supplementary Material

Supplemental Document (PDF)

Data (coming soon)

Code (coming soon)

Acknowledgement

This work started when Zhixin Shu was an intern at Adobe Research. This work was supported by a gift from Adobe, NSF IIS-1161876, the Stony Brook SensorCAT and the Partner University Fund 4DVision project.